This Blog is Blinding You Less

May 09, 2010 3:48 AM by Daniel Chambers (last modified on May 09, 2010 3:54 AM)

I’m a software developer (a professional one now! I graduated in April), not a designer, so when I’d finished designing the site I was surprised at how good it looked. However, it came as little surprise that apparently the site was causing Rob Conery’s cones to beat his rods, and therefore it should be burned alive. When receiving such brusque criticism, one may wish to send back an equally abrupt response; however, in this case the criticism was justified, so instead of starting a flame war I considered it on its merits (and I’m sure Rob meant his comments humorously). Rob also took the time to write a post on his blog detailing his grievances and making some suggestions.

It turned out that half of his problem was caused by him viewing the site on a Mac that didn’t have the font I use for the text on this site (Segoe UI). I had assumed most people would have Segoe UI by now, as it is packaged with Windows Vista, Windows 7, Office 2007 and Leopard (and I assume Snow Leopard too). However, on further investigation it seems that far fewer people have it installed than I had assumed (around 50% on PC, and probably fewer on Mac). This is what Rob saw, versus what I saw in my browser on Windows 7:

What Rob Conery saw (left) versus what I saw (right)

Looking at the differences there, I really can’t blame him for hating the site. However, even with the correct font there are still a few readability epic fails in there, in particular the blue text on the black background. I had been using (and liking) that code colour scheme in my IDE for over five years, so I guess I was used to it and didn’t notice.

I did some reading about typography and layout; however, I didn’t particularly want to replace my CSS with a Blueprint base, as that would have meant redoing a lot of tedious, annoying CSS work. I figured I could fix the main readability pain points pretty easily by making a few small, key changes. I read that high-contrast colour schemes like the one used on this site need larger line spacing than normal. I also read about good fonts to roll back to when clients are missing the main one. In addition, I found a new code colour scheme that I liked, an adaptation of one made by my friend Dwain Bunker. Here is the result of my changes:

The new site style (left) versus the old site style (right)

As you can see, I’ve shrunk the font size slightly and increased the line height. The font size had to shrink because I was using a size that works in Segoe UI but was not well supported by the fonts I rolled back to (hence the travesty that Rob had to see). I’m now using a font size that all the fonts seem able to deal with. The main font colour is also a slightly darker shade than it was previously. I’ve also changed the code style significantly: the blue on black is gone, replaced by a more purple-blue colour that is easier to read, and the lime green has been replaced with white (I’ve also started using this style in my IDE). However, we still need to see what happens when the user doesn’t have Segoe UI installed (other than Dogbert bazookaing your old XP computer (upgrade to Windows 7 now, damnit!), or a Terminator terminating your Mac):

The new site style (left) versus the new font rollback site style (right)

As you can see, there’s very little difference between the normal font (Segoe UI) and the rollback font (Tahoma). I still think the normal font (Segoe UI) is much better; it has better kerning and slightly wider letters. If you don’t have Tahoma (old Macs may not have it), I roll back further to Geneva (a Mac font), which is apparently similar to Tahoma.

Hopefully, these changes have addressed the main pain points with the readability of this site. Of course, some people may just plain dislike high contrast colour schemes, but you can’t please everybody.

Deep Inside ASP.NET MVC 2 Model Metadata and Validation

April 14, 2010 5:33 PM by Daniel Chambers (last modified on April 14, 2010 6:15 PM)

One of the big-ticket features of ASP.NET MVC 2 is its ability to perform model validation at the model binding stage in the controller. It can also store metadata about your model itself (such as which properties are required) and can therefore be more intelligent when it automatically renders an HTML interface for your data model.

Unfortunately, the way ASP.NET MVC 2 handles validation (in the controller) is at odds with my preferred way of handling validation (in the business layer, with validation errors batched and thrown back up to the controller as exceptions; I used xVal). To understand ASP.NET MVC 2’s validation, I wanted to know how it worked, not just how to use it. Unfortunately, at the time of writing, there seemed to be very few sources of information that described how MVC 2’s model metadata and validation worked at a high, architectural level. The ASP.NET MVC MSDN class documentation is pretty horrible (it’s anaemic in terms of detail). Sure, there are blog articles on how to use it, but none (that I could find, anyway) on how it all really works together under the covers. And if you don’t know how it works, it can be quite difficult to know how to use it most effectively.

To address this lack of documentation, this blog post attempts to explain the architecture of the ASP.NET MVC 2 metadata and validation features and to show you, at a high level, how they work. As I am not a member of the MVC team at Microsoft, I don’t have access to any architecture documentation they may have, so keep in mind that everything in this post was deduced by reading the MVC source code and through judicious application of .NET Reflector. If I’ve made any mistakes, please let me know in the comments.

Model Metadata

ASP.NET MVC 2 can create metadata about your data model and use it for various tasks, such as validation and special rendering. Examples of the metadata it stores are whether a property on a data model class is required, whether it’s read-only, and what its display name is. For instance, the “is required” metadata is useful because it lets you automatically generate HTML and JavaScript that ensure the user enters a value in a textbox, and append an asterisk (*) to the textbox’s label so that the user knows it is a required field.

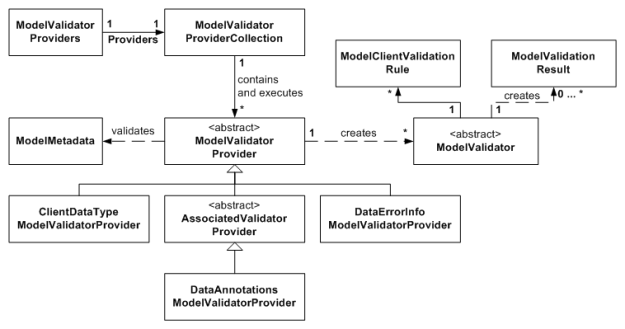

Figure 1. ModelMetadata and Providers Class Diagram

The ASP.NET MVC Model Metadata system stores metadata across many instances of the ModelMetadata class (and its subclasses). ModelMetadata is a recursive data structure: the root ModelMetadata instance stores the metadata for the root data model object type, and also stores references to a ModelMetadata instance for each property on that root data model type. Figure 2 shows a visual example of this for an example “Book” data model class.

Figure 2. Model Metadata Recursive Data Structure Diagram (note that not all properties on ModelMetadata are shown)

As you can see in Figure 2, there is a root ModelMetadata that describes the Book instance. You’ll notice that its ContainerType and PropertyName properties are null, which indicates that this is the root ModelMetadata instance. This root ModelMetadata instance has a Properties property that provides lazy-loaded access to ModelMetadata instances for each of the Book type’s properties. These ModelMetadata instances do have ContainerType and PropertyName set because, being for properties, they have a container type (i.e. Book) and a name (e.g. Title).
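To make the recursive shape concrete, here is a minimal sketch that mimics it with plain reflection. `SketchMetadata` and `SketchBook` are hypothetical stand-ins, not MVC 2 types: the real ModelMetadata carries far more information and is created by a provider rather than a static factory method. The point is only the shape: a root node with a null ContainerType and PropertyName, and lazily created child nodes for each property.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for MVC 2's ModelMetadata: one node per type or property.
public class SketchMetadata
{
    private readonly Lazy<IReadOnlyList<SketchMetadata>> _properties;

    private SketchMetadata(Type modelType, Type containerType, string propertyName)
    {
        ModelType = modelType;
        ContainerType = containerType;
        PropertyName = propertyName;
        // Child nodes are built only the first time Properties is read (lazy loading),
        // so the recursion never goes deeper than a caller actually walks.
        _properties = new Lazy<IReadOnlyList<SketchMetadata>>(() =>
            modelType.GetProperties()
                     .Select(p => new SketchMetadata(p.PropertyType, modelType, p.Name))
                     .ToList());
    }

    public Type ModelType { get; }
    public Type ContainerType { get; }   // null for the root node
    public string PropertyName { get; }  // null for the root node
    public IReadOnlyList<SketchMetadata> Properties => _properties.Value;

    // Creates the root node: no container type and no property name.
    public static SketchMetadata ForType(Type modelType) => new SketchMetadata(modelType, null, null);
}

// Hypothetical data model matching the "Book" example in Figure 2.
public class SketchBook
{
    public string Title { get; set; }
    public int PageCount { get; set; }
}
```

Because the child list is wrapped in `Lazy<T>`, creating the root node costs almost nothing until something actually walks into its properties.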

So how do we get instances of ModelMetadata for a data model, and how is the model’s metadata divined in the first place? This is where ModelMetadataProvider classes come in (see Figure 1). ModelMetadataProvider classes are able to create ModelMetadata instances. However, ModelMetadataProvider itself is an abstract class, and delegates all its functionality to subclasses. The DataAnnotationsModelMetadataProvider is an implementation that creates model metadata based on the attributes from the System.ComponentModel.DataAnnotations namespace that are applied to data model classes and their properties. For example, the RequiredAttribute is used to determine whether a property is required (i.e. it causes the ModelMetadata.IsRequired property to be set).
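A rough sketch of the idea: reflect over a type’s properties and turn attributes into metadata flags. This is not DataAnnotationsModelMetadataProvider’s actual code; `SketchPropertyMetadata`, `SketchAnnotationsReader` and `SketchBook` are hypothetical, and mapping [ReadOnly] and [DisplayName] (both from System.ComponentModel) onto IsReadOnly and DisplayName is an assumption made for illustration, alongside the [Required] to IsRequired mapping described above.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.ComponentModel.DataAnnotations;
using System.Reflection;

// Hypothetical, much-reduced metadata record for one property.
public class SketchPropertyMetadata
{
    public string PropertyName { get; set; }
    public bool IsRequired { get; set; }
    public bool IsReadOnly { get; set; }
    public string DisplayName { get; set; }
}

public static class SketchAnnotationsReader
{
    // Builds metadata for each public property by inspecting its attributes,
    // in the spirit of (but far simpler than) DataAnnotationsModelMetadataProvider.
    public static List<SketchPropertyMetadata> Read(Type modelType)
    {
        var result = new List<SketchPropertyMetadata>();
        foreach (PropertyInfo property in modelType.GetProperties())
        {
            var readOnly = property.GetCustomAttribute<ReadOnlyAttribute>();
            var displayName = property.GetCustomAttribute<DisplayNameAttribute>();
            result.Add(new SketchPropertyMetadata
            {
                PropertyName = property.Name,
                // [Required] on the property sets IsRequired.
                IsRequired = property.IsDefined(typeof(RequiredAttribute), inherit: true),
                IsReadOnly = readOnly != null && readOnly.IsReadOnly,
                // Use the property name when no [DisplayName] is present.
                DisplayName = displayName?.DisplayName ?? property.Name
            });
        }
        return result;
    }
}

// Hypothetical annotated data model.
public class SketchBook
{
    [Required]
    [DisplayName("Book title")]
    public string Title { get; set; }

    [ReadOnly(true)]
    public int PageCount { get; set; }
}
```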

You’ll notice that the DataAnnotationsModelMetadataProvider does not inherit directly from ModelMetadataProvider (see Figure 1). Instead, it inherits from the AssociatedMetadataProvider abstract class. The AssociatedMetadataProvider class is what actually retrieves the attributes from the class and its properties, using an ICustomTypeDescriptor behind the scenes (which turns out to be the internal AssociatedMetadataTypeTypeDescriptor class, constructed by the AssociatedMetadataTypeTypeDescriptionProvider class). The AssociatedMetadataProvider then delegates the actual responsibility of building a ModelMetadata instance from the attributes it found to a subclass. The DataAnnotationsModelMetadataProvider takes on this responsibility.
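The split described above is essentially the template method pattern: the base class knows how to find attributes, and the subclass knows what they mean. A minimal sketch of that shape, using hypothetical names (`SketchAssociatedProvider`, `CreateMetadataFromAttributes`, `SketchDataAnnotationsProvider`) and plain reflection in place of the ICustomTypeDescriptor machinery MVC actually uses:

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Linq;
using System.Reflection;

// Hypothetical metadata record produced by the sketch providers.
public class SketchMetadataRecord
{
    public string PropertyName { get; set; }
    public bool IsRequired { get; set; }
}

// Base class: knows HOW to find the attributes, not what they mean.
public abstract class SketchAssociatedProvider
{
    public SketchMetadataRecord GetMetadataForProperty(Type containerType, string propertyName)
    {
        PropertyInfo property = containerType.GetProperty(propertyName)
            ?? throw new ArgumentException("Unknown property: " + propertyName, nameof(propertyName));
        IEnumerable<Attribute> attributes = property.GetCustomAttributes(inherit: true).Cast<Attribute>();
        // Hand the interpretation of the attributes to the subclass.
        return CreateMetadataFromAttributes(attributes, propertyName);
    }

    protected abstract SketchMetadataRecord CreateMetadataFromAttributes(IEnumerable<Attribute> attributes, string propertyName);
}

// Subclass: knows what the DataAnnotations attributes mean.
public class SketchDataAnnotationsProvider : SketchAssociatedProvider
{
    protected override SketchMetadataRecord CreateMetadataFromAttributes(IEnumerable<Attribute> attributes, string propertyName)
    {
        return new SketchMetadataRecord
        {
            PropertyName = propertyName,
            IsRequired = attributes.OfType<RequiredAttribute>().Any()
        };
    }
}

// Hypothetical data model.
public class SketchBook
{
    [Required] public string Title { get; set; }
    public string Subtitle { get; set; }
}
```

A provider that understands a different set of attributes would just be another subclass; the attribute-gathering code is reused untouched.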

As you can see, the whole metadata-creation system is very modular and allows you to generate model metadata in any way you want, simply by using a different ModelMetadataProvider class. You choose the provider the system uses by creating an instance of it and assigning it to the static property ModelMetadataProviders.Current. By default, an instance of DataAnnotationsModelMetadataProvider is used.
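The pluggability comes down to a settable static default that everything else reads from. A minimal sketch of the pattern with hypothetical types (`SketchMetadataProvider`, `SketchMetadataProviders` and the two implementations); in MVC 2 itself you would assign your own provider instance to ModelMetadataProviders.Current, typically in Application_Start.

```csharp
using System;

// Hypothetical abstract provider: the only thing callers depend on.
public abstract class SketchMetadataProvider
{
    public abstract string DescribeProperty(Type containerType, string propertyName);
}

// Hypothetical default implementation.
public class SketchDefaultProvider : SketchMetadataProvider
{
    public override string DescribeProperty(Type containerType, string propertyName)
        => containerType.Name + "." + propertyName;
}

// Hypothetical replacement an application might plug in instead.
public class SketchUpperCaseProvider : SketchMetadataProvider
{
    public override string DescribeProperty(Type containerType, string propertyName)
        => (containerType.Name + "." + propertyName).ToUpperInvariant();
}

// Static registry mirroring the ModelMetadataProviders.Current idea.
public static class SketchMetadataProviders
{
    private static SketchMetadataProvider _current = new SketchDefaultProvider();

    public static SketchMetadataProvider Current
    {
        get => _current;
        // This sketch refuses null so consumers never need to null-check the provider.
        set => _current = value ?? throw new ArgumentNullException(nameof(value));
    }
}
```

Because consumers only ever read `SketchMetadataProviders.Current`, replacing the implementation requires no changes to them.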

Model Validation

ASP.NET MVC 2 includes a very modular and powerful validation system. Unfortunately, it’s tightly coupled to ASP.NET MVC, as it passes ControllerContexts around throughout. This means you can’t move its use into your business layer; you have to keep it in the controller. The modularity and power come with a trade-off: architectural complexity. The validation system architecture contains quite a lot of indirection, but when you step through it you can see why the indirection exists: extensibility.

Figure 3 shows an overview of the validation system architecture. Key to the system are ModelValidators, which, when executed, validate a part of the model in a specific way and return ModelValidationResults, each of which describes a validation error with a simple text message. ModelValidators also have a collection of ModelClientValidationRules, which are simple data structures that represent the client-side JavaScript validation rules to be used for that ModelValidator.
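A minimal sketch of that contract, using hypothetical `SketchValidator`, `SketchValidationResult` and `SketchClientRule` types rather than MVC’s (the real ModelValidator is also constructed with a ModelMetadata and a ControllerContext). The example validator both checks a value on the server and describes the same rule for client-side script; the rule name and parameter key are made up for the sketch.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical equivalent of ModelValidationResult: which member failed, and why.
public class SketchValidationResult
{
    public string MemberName { get; set; }
    public string Message { get; set; }
}

// Hypothetical equivalent of ModelClientValidationRule: a rule name plus the
// parameters client-side script would need to enforce the same rule.
public class SketchClientRule
{
    public string ValidationType { get; set; }
    public string ErrorMessage { get; set; }
    public Dictionary<string, object> Parameters { get; } = new Dictionary<string, object>();
}

public abstract class SketchValidator
{
    // Server-side check: yields nothing when the value is valid.
    public abstract IEnumerable<SketchValidationResult> Validate(object value);

    // Client-side description of the same rule (none by default).
    public virtual IEnumerable<SketchClientRule> GetClientRules() => Array.Empty<SketchClientRule>();
}

// Example concrete validator: a maximum string length.
public class SketchMaxLengthValidator : SketchValidator
{
    private readonly int _maxLength;

    public SketchMaxLengthValidator(int maxLength) => _maxLength = maxLength;

    public override IEnumerable<SketchValidationResult> Validate(object value)
    {
        if (value is string s && s.Length > _maxLength)
            yield return new SketchValidationResult { Message = $"Must be at most {_maxLength} characters." };
    }

    public override IEnumerable<SketchClientRule> GetClientRules()
    {
        var rule = new SketchClientRule
        {
            ValidationType = "stringLength",
            ErrorMessage = $"Must be at most {_maxLength} characters."
        };
        rule.Parameters["maximumLength"] = _maxLength;
        yield return rule;
    }
}
```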

ModelValidators are created by ModelValidatorProvider classes when they are asked for the validators for a particular ModelMetadata object. Many ModelValidatorProviders can be used at the same time to provide validation (i.e. return ModelValidators) for a single ModelMetadata. This is reflected in the ModelValidatorProviders.Providers static property, which is of type ModelValidatorProviderCollection. By default, this collection holds instances of the ClientDataTypeModelValidatorProvider, DataAnnotationsModelValidatorProvider and DataErrorInfoModelValidatorProvider classes. The collection has a method (GetValidators) that lets you easily execute all the contained ModelValidatorProviders and get all the returned ModelValidators back. In fact, this is what is called when you call ModelMetadata.GetValidators.
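The “ask every provider, then merge the answers” behaviour of ModelValidatorProviderCollection.GetValidators can be sketched as below. All types are hypothetical, and to keep it short a provider receives just a property name (instead of a ModelMetadata and ControllerContext) and a validator is reduced to a function that returns an error message or null.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Linq;

// Hypothetical validator: returns an error message, or null when the value is valid.
public delegate string SketchCheck(object value);

// Hypothetical provider contract: may return zero or more checks for a property.
public abstract class SketchValidatorProvider
{
    public abstract IEnumerable<SketchCheck> GetValidators(string propertyName);
}

// Contributes a "required" check for the Title property only.
public class SketchRequiredProvider : SketchValidatorProvider
{
    public override IEnumerable<SketchCheck> GetValidators(string propertyName)
    {
        if (propertyName == "Title")
            yield return value => (value == null) ? "Title is required." : null;
    }
}

// Contributes a range check for the PageCount property only.
public class SketchNumericProvider : SketchValidatorProvider
{
    public override IEnumerable<SketchCheck> GetValidators(string propertyName)
    {
        if (propertyName == "PageCount")
            yield return value => (value is int n && n < 0) ? "PageCount must not be negative." : null;
    }
}

// Mirrors the idea of ModelValidatorProviderCollection: ask every provider, merge the results.
public class SketchValidatorProviderCollection : Collection<SketchValidatorProvider>
{
    public IEnumerable<SketchCheck> GetValidators(string propertyName)
        => this.SelectMany(provider => provider.GetValidators(propertyName));
}
```

Adding a new kind of validation means adding a provider to the collection; nothing that calls GetValidators has to change.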

The DataAnnotationsModelValidatorProvider is able to create ModelValidators that validate a model held in a ModelMetadata object by looking at System.ComponentModel.DataAnnotations attributes that have been placed on the data model class and its properties. In a similar fashion to the DataAnnotationsModelMetadataProvider, the DataAnnotationsModelValidatorProvider leaves the actual retrieving of the attributes from the data types to its base class, the AssociatedValidatorProvider.

The ClientDataTypeModelValidatorProvider produces ModelValidators that don’t actually do any server-side validation. Instead, the ModelValidators that it produces provide the ModelClientValidationRules needed to enforce data types (such as numeric types like int) on the client. No server-side validation is needed for data types, since C# is a strongly-typed language and only an int can be stored in an int property. On the client side, however, it is quite possible for the user to type a string (e.g. “abc”) into a textbox that requires an int, hence the need for special ModelClientValidationRules to deal with this.

The DataErrorInfoModelValidatorProvider is able to create ModelValidators that validate a data model that implements the IDataErrorInfo interface.
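For readers who haven’t met IDataErrorInfo: the model reports its own errors through an indexer keyed by property name, plus an Error property for object-level problems, with null or an empty string meaning “no error”. The sketch below shows a hypothetical `SketchBook` model implementing the interface and a hypothetical `SketchDataErrorInfoChecker` helper that asks the kind of questions a validator built on it would ask; it is not the DataErrorInfoModelValidatorProvider’s code.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;

// Hypothetical data model that validates itself via IDataErrorInfo.
public class SketchBook : IDataErrorInfo
{
    public string Title { get; set; }
    public int PageCount { get; set; }

    // Object-level error; an empty string means "no error".
    public string Error =>
        (PageCount == 0 && string.IsNullOrEmpty(Title)) ? "Book is empty." : string.Empty;

    // Property-level errors, looked up by property name.
    public string this[string columnName]
    {
        get
        {
            switch (columnName)
            {
                case nameof(Title):
                    return string.IsNullOrEmpty(Title) ? "Title is required." : null;
                case nameof(PageCount):
                    return PageCount < 0 ? "PageCount must not be negative." : null;
                default:
                    return null;
            }
        }
    }
}

public static class SketchDataErrorInfoChecker
{
    // Collects non-empty messages for the given properties, then the object-level error.
    public static List<string> Check(IDataErrorInfo model, params string[] propertyNames)
    {
        var errors = new List<string>();
        foreach (string name in propertyNames)
        {
            string message = model[name];
            if (!string.IsNullOrEmpty(message)) errors.Add(message);
        }
        if (!string.IsNullOrEmpty(model.Error)) errors.Add(model.Error);
        return errors;
    }
}
```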

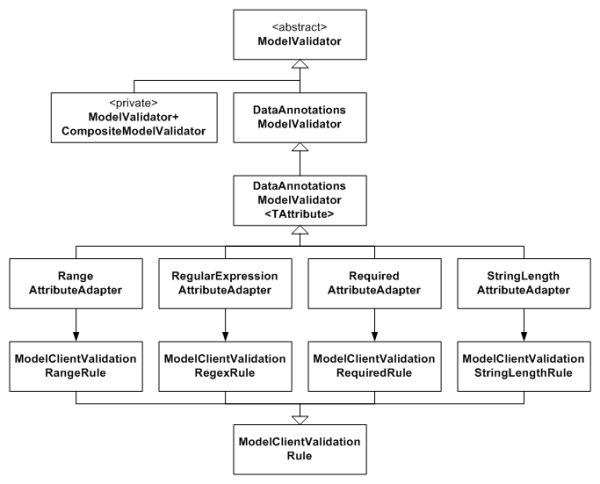

You may have noticed that ModelValidator is an abstract class. ModelValidator delegates the actual validation functionality to its subclasses, and it’s these concrete subclasses that the different ModelValidatorProviders create. Let’s take a deeper look at this, with a focus on the ModelValidators that the DataAnnotationsModelValidatorProvider creates.

As you can see in Figure 4, there is a concrete ModelValidator class for each System.ComponentModel.DataAnnotations validation attribute (e.g. RangeAttributeAdapter for the RangeAttribute). The DataAnnotationsModelValidator provides the general ability to execute a ValidationAttribute. The generic DataAnnotationsModelValidator<TAttribute> simply lets subclasses access the concrete ValidationAttribute that the ModelValidator is for through a type-safe property.
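The relationship between the non-generic and generic validator classes can be sketched as follows. `SketchAttributeValidator`, `SketchAttributeValidator<TAttribute>` and `SketchRangeAdapter` are hypothetical stand-ins for the MVC classes; the sketch does use the real RangeAttribute and the ValidationAttribute.IsValid and FormatErrorMessage methods from System.ComponentModel.DataAnnotations.

```csharp
using System;
using System.ComponentModel.DataAnnotations;

// Non-generic base: knows how to run ANY ValidationAttribute.
public class SketchAttributeValidator
{
    public SketchAttributeValidator(ValidationAttribute attribute) => Attribute = attribute;

    public ValidationAttribute Attribute { get; }

    // Returns an error message, or null when the attribute accepts the value.
    public string Validate(object value, string displayName)
        => Attribute.IsValid(value) ? null : Attribute.FormatErrorMessage(displayName);
}

// Generic layer: exposes the concrete attribute type without casts in subclasses.
public class SketchAttributeValidator<TAttribute> : SketchAttributeValidator
    where TAttribute : ValidationAttribute
{
    public SketchAttributeValidator(TAttribute attribute) : base(attribute) { }

    public new TAttribute Attribute => (TAttribute)base.Attribute;
}

// Adapter for one attribute type; it can read Minimum/Maximum in a type-safe way,
// e.g. to fill in the parameters of a client-side range rule.
public class SketchRangeAdapter : SketchAttributeValidator<RangeAttribute>
{
    public SketchRangeAdapter(RangeAttribute attribute) : base(attribute) { }

    public (object Min, object Max) ClientRangeParameters => (Attribute.Minimum, Attribute.Maximum);
}
```

The `new` property hides the base one with a more specific type, so the adapter reads Minimum and Maximum without casting, while the base class can still execute any ValidationAttribute.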

You’ll notice in Figure 4 how each DataAnnotationsModelValidator<TAttribute> subclass has a matching ModelClientValidationRule. Each of those rule subclasses (e.g. ModelClientValidationRangeRule) represents the client-side version of the ModelValidator. In short, they contain the rule name and whatever data needs to go along with it (for example, a ModelClientValidationStringLengthRule instance holds the specific string length). These rules can be serialised out to the page so that the page’s JavaScript validation logic can hook up client-side validation (see Phil Haack’s blog on this for more information).

The DataAnnotationsModelValidatorProvider implements an extra layer of indirection that allows you to get it to create custom ModelValidators for any custom ValidationAttributes you might choose to create and use on your data model. Internally it has a Dictionary<Type, DataAnnotationsModelValidationFactory>, where the Type of a ValidationAttribute is associated with a DataAnnotationsModelValidationFactory (a delegate) that can create a ModelValidator for the attribute type it is associated with. You can add to this dictionary by calling the static DataAnnotationsModelValidatorProvider.RegisterAdapterFactory method.
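A minimal sketch of that registry idea. `SketchAdapterRegistry`, `SketchValidationFactory` and `EvenNumberAttribute` are hypothetical, and the factory here receives only the attribute (the real DataAnnotationsModelValidationFactory delegate is also handed the ModelMetadata and ControllerContext). Falling back to the attribute’s own IsValid when nothing is registered is this sketch’s choice.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Hypothetical simplified factory delegate: attribute in, validation function out.
// The returned function yields an error message, or null when the value is valid.
public delegate Func<object, string> SketchValidationFactory(ValidationAttribute attribute);

public static class SketchAdapterRegistry
{
    private static readonly Dictionary<Type, SketchValidationFactory> Factories =
        new Dictionary<Type, SketchValidationFactory>
        {
            // Built-in entry: required fields get a short custom message.
            [typeof(RequiredAttribute)] = attribute => value => attribute.IsValid(value) ? null : "Required."
        };

    // Analogue of DataAnnotationsModelValidatorProvider.RegisterAdapterFactory.
    public static void RegisterAdapterFactory(Type attributeType, SketchValidationFactory factory)
    {
        if (!typeof(ValidationAttribute).IsAssignableFrom(attributeType))
            throw new ArgumentException("Not a ValidationAttribute type.", nameof(attributeType));
        Factories[attributeType] = factory;
    }

    // Looks up the factory for the attribute's exact type; unknown attribute types
    // fall back to running the attribute's own IsValid check.
    public static Func<object, string> CreateValidator(ValidationAttribute attribute)
    {
        if (Factories.TryGetValue(attribute.GetType(), out SketchValidationFactory factory))
            return factory(attribute);
        return value => attribute.IsValid(value) ? null : attribute.FormatErrorMessage("value");
    }
}

// Hypothetical custom attribute: only even integers are valid.
public class EvenNumberAttribute : ValidationAttribute
{
    public override bool IsValid(object value) => value is int n && n % 2 == 0;
}
```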

The CompositeModelValidator is a private inner class of ModelValidator and is returned when you call the static ModelValidator.GetModelValidator method. It is a special ModelValidator that takes a ModelMetadata instance, validates the model by using its GetValidators method, and validates the model’s properties by calling GetValidators on the ModelMetadata instance for each model property. It collects all the ModelValidationResult objects returned by all the ModelValidators that it executes and returns them in one big bundle.

Putting Metadata and Validation to Use

Now that we’re familiar with the workings of the ASP.NET MVC 2 Metadata and Validation systems, let’s see how we can actually use them to validate some data. Note that you don’t need to trigger validation explicitly, as ASP.NET MVC does so during model binding. This section just shows how the massive system we’ve looked at is kicked into execution.

The Controller.TryValidateModel method is a good example of showing model metadata creation and model validation in action:

    protected internal bool TryValidateModel(object model, string prefix)
    {
        if (model == null)
            throw new ArgumentNullException("model");

        ModelMetadata modelMetadata = ModelMetadataProviders.Current.GetMetadataForType(() => model, model.GetType());
        ModelValidator compositeValidator = ModelValidator.GetModelValidator(modelMetadata, base.ControllerContext);

        foreach (ModelValidationResult result in compositeValidator.Validate(null))
        {
            this.ModelState.AddModelError(DefaultModelBinder.CreateSubPropertyName(prefix, result.MemberName), result.Message);
        }

        return this.ModelState.IsValid;
    }

The first thing we do in the above method is create the ModelMetadata for the root object in our model (which is the model object passed in as a method parameter). We then get a CompositeModelValidator that will internally get and execute all the ModelValidators for the validation of the root object type and all its properties. Finally, we loop over all the validation errors gotten from executing the CompositeModelValidator (which executes all the ModelValidators it fetched internally) and add each error to the controller’s ModelState.

The CompositeModelValidator really hides the actual work of getting and executing the ModelValidators from us. So let’s see how it works internally.

    public override IEnumerable<ModelValidationResult> Validate(object container)
    {
        bool propertiesValid = true;

        foreach (ModelMetadata propertyMetadata in Metadata.Properties)
        {
            foreach (ModelValidator propertyValidator in propertyMetadata.GetValidators(ControllerContext))
            {
                foreach (ModelValidationResult propertyResult in propertyValidator.Validate(Metadata.Model))
                {
                    propertiesValid = false;
                    yield return new ModelValidationResult
                    {
                        MemberName = DefaultModelBinder.CreateSubPropertyName(propertyMetadata.PropertyName, propertyResult.MemberName),
                        Message = propertyResult.Message
                    };
                }
            }
        }

        if (propertiesValid)
        {
            foreach (ModelValidator typeValidator in Metadata.GetValidators(ControllerContext))
                foreach (ModelValidationResult typeResult in typeValidator.Validate(container))
                    yield return typeResult;
        }
    }

First it validates all the properties on the object that is held by the ModelMetadata it was created with (the Metadata property). It does this by getting the ModelMetadata of each property, getting the ModelValidators for that ModelMetadata, then executing each ModelValidator and yield returning the ModelValidationResults (if any; there will be none if there were no validation errors).

Then, if all the properties were valid (i.e. none of the property ModelValidators returned any ModelValidationResults), it proceeds to validate the root ModelMetadata by getting its ModelValidators, executing each one, and yield returning any ModelValidationResults.

The ModelMetadata.GetValidators method that the CompositeModelValidator is using simply asks the default ModelValidatorProviderCollection to execute all its ModelValidatorProviders and returns all the created ModelValidators:

    public virtual IEnumerable<ModelValidator> GetValidators(ControllerContext context)
    {
        return ModelValidatorProviders.Providers.GetValidators(this, context);
    }

Peanut-Gallery Criticism

The only criticism I have is levelled against the Model Validation system, which for some reason requires a ControllerContext to be passed around internally (see the ModelValidator constructor). As far as I can see, that ControllerContext is never actually used by any out-of-the-box validation component, which leads me to question why it needs to exist. I dislike it because it prevents me from even considering using the validation system down in the business layer (as opposed to in the controller) without coupling the business layer to ASP.NET MVC. However, there may be a good reason for its existence that I am not privy to.

Conclusion

In this blog post we’ve addressed the problem of a serious lack of documentation that explains the inner workings and architecture of the ASP.NET MVC 2 Model Metadata and Validation systems (that existed at least at the time of writing). We’ve systematically deconstructed and looked at the architecture of the two systems and seen how the architecture enables you to easily generate metadata about your data model and validate it, and how it provides the flexibility to plug in, change and extend the basic functionality to meet your specific use case.

DigitallyCreated Utilities v1.0.0 Released

March 24, 2010 2:43 PM by Daniel Chambers (last modified on June 07, 2010 3:35 PM)

After a hell of a lot of work, I am happy to announce that the 1.0.0 version of DigitallyCreated Utilities has been released! DigitallyCreated Utilities is a collection of many neat reusable utilities for lots of different .NET technologies that I’ve developed over time and personally use on this website, as well as on others I have a hand in developing. It’s a fully open source project, licensed under the Ms-PL licence, which means you can pretty much use it wherever you want and do whatever you want to it. No viral licences here.

The main reason that it has taken me so long to release this version is because I’ve been working hard to get all the wiki documentation on CodePlex up to scratch. After all, two of the project values are:

- To provide fully XML-documented source code

- To back up the source code documentation with useful tutorial articles that help developers use this project

And truly, nothing is more frustrating than code with bad documentation. To me, bad documentation is the lack of a unifying tutorial that shows the functionality in action, and the lack of decent XML documentation on the code. Sorry, XMLdoc that’s autogenerated by tools like GhostDoc, and never added to by the author, just doesn’t cut it. If you can auto-generate the documentation from the method and parameter names, it’s obviously not providing any extra value above and beyond what was already there without it!

So what does DCU v1.0.0 bring to the table? A hell of a lot actually, though you may not need all of it for every project. Here’s the feature list grouped by broad technology area:

- ASP.NET MVC and LINQ
  - Sorting and paging of data in a table made easy by HtmlHelpers and LINQ extensions (see tutorial)
- ASP.NET MVC
  - HtmlHelpers
    - TempInfoBox - A temporary "action performed" box that displays to the user for 5 seconds then fades out (see tutorial)
    - CollapsibleFieldset - A fieldset that collapses and expands when you click the legend (see tutorial)
    - Gravatar - Renders an img tag for a Gravatar (see tutorial)
    - CheckboxStandard & BoolBinder - Renders a normal checkbox without MVC's normal hidden field (see tutorial)
    - EncodeAndInsertBrsAndLinks - Great for the display of user input, this will insert `<br/>`s for newlines and `<a>` tags for URLs and escape all HTML (see tutorial)
  - IncomingRequestRouteConstraint - Great for supporting old permalink URLs using ASP.NET routing (see tutorial)
  - Improved JsonResult - Replaces ASP.NET MVC's JsonResult with one that lets you specify JavaScriptConverters (see tutorial)
  - Permanently Redirect ActionResults - Redirect users with 301 (Moved Permanently) HTTP status codes (see tutorial)
  - Miscellaneous Route Helpers - For example, RouteToCurrentPage (see tutorial)
- LINQ
  - MatchUp & Federator LINQ methods - Great for doing diffs on sequences (see tutorial)
- Entity Framework
  - CompiledQueryReplicator - Manage your compiled queries such that you don't accidentally bake in the wrong MergeOption and create a difficult to discover bug (see tutorial)
  - Miscellaneous Entity Framework Utilities - For example, ClearNonScalarProperties and Setting Entity Properties to Modified State (see tutorial)
- Error Reporting
  - Easily wire up some simple classes and have your application email you detailed exception and error object dumps (see tutorial)
- Concurrent Programming
- Unity & WCF
  - WCF Client Injection Extension - Easily use dependency injection to transparently inject WCF clients using Unity (see tutorial)
- Miscellaneous Base Class Library Utilities
  - SafeUsingBlock and DisposableWrapper - Work with IDisposables in an easier fashion and avoid the bug where using blocks can silently swallow exceptions (see tutorial)
  - Time Utilities - For example, TimeSpan To Ago String, TzId -> Windows TimeZoneInfo (see tutorial)
  - Miscellaneous Utilities - Collection Add/RemoveAll, Base64StreamReader, AggregateException (see tutorial)

DCU is split across six different assemblies so that developers can pick and choose the stuff they want and not take unnecessary dependencies if they don’t have to. This means if you don’t use Unity in your application, you don’t need to take a dependency on Unity just to use the Error Reporting functionality.

I’m really pleased about this release as it’s the culmination of rather a lot of work on my part that I think will help other developers write their applications more easily. I’m already using it here on DigitallyCreated in many many places; for example the Error Reporting code tells me when this site crashes (and has been invaluable so far), the CompiledQueryReplicator helps me use compiled queries effectively on the back-end, and the ReaderWriterLock is used behind the scenes for the Twitter feed on the front page.

I hope you enjoy this release and find some use for it in your work or play activities. You can download it here.

C# Using Blocks can Swallow Exceptions

March 05, 2010 3:37 AM by Daniel Chambers (last modified on March 05, 2010 3:49 AM)

While working with WCF for my part time job, I came across this page on MSDN that condemned the C# using block as unsafe to use when working with a WCF client. The problem is that the using block can silently swallow exceptions without you even knowing. To prove this, here’s a small sample:

    using System;

    public static class Program
    {
        public static void Main()
        {
            try
            {
                using (new CrashingDisposable())
                {
                    throw new Exception("Inside using block");
                }
            }
            catch (Exception e)
            {
                Console.WriteLine("Caught exception: " + e.Message);
            }
        }

        // A disposable whose Dispose always throws
        private class CrashingDisposable : IDisposable
        {
            public void Dispose()
            {
                throw new Exception("Inside Dispose");
            }
        }
    }

The above program will write “Caught exception: Inside Dispose” to the console. But where did the “Inside using block” exception go? It was swallowed by the using block! How this happens is more obvious when you unroll the using block into the try/finally block that it really is (note the outer braces that limit crashingDisposable’s scope):

    {
        CrashingDisposable crashingDisposable = new CrashingDisposable();
        try
        {
            throw new Exception("Inside using block");
        }
        finally
        {
            if (crashingDisposable != null)
                ((IDisposable)crashingDisposable).Dispose(); //Dispose exception thrown here
        }
    }

As you can see, the “Inside using block” exception is lost entirely. A reference to it isn’t even present and the exception from the Dispose call is the one that gets thrown up.

So, how does this affect you in WCF? Well, when a WCF client is disposed it is closed, which may throw an exception. So if, while using your client object, you encounter an exception that escapes the using block, the client will be disposed and therefore closed, which could throw an exception that will hide the original exception. That’s just bad for debugging.

This is obviously undesirable behaviour, so I’ve written a construct that I’ve dubbed a “Safe Using Block” that stops the exception thrown in the using block from being lost. Instead, the safe using block gathers both exceptions together and throws them up inside an AggregateException (present in DigitallyCreated.Utilities.Bcl, but soon to be found in .NET 4.0). Here’s the above using block rewritten as a safe using block:

    new CrashingDisposable().SafeUsingBlock(crashingDisposable =>
    {
        throw new Exception("Inside using exception");
    });

When this code runs, an AggregateException is thrown that contains both the “Inside using exception” exception and the “Inside Dispose” exception. So how does this work? Here’s the code:

    public static void SafeUsingBlock<TDisposable>(this TDisposable disposable, Action<TDisposable> action)
        where TDisposable : IDisposable
    {
        try
        {
            action(disposable);
        }
        catch (Exception actionException)
        {
            try
            {
                disposable.Dispose();
            }
            catch (Exception disposeException)
            {
                throw new DigitallyCreated.Utilities.Bcl.AggregateException(actionException, disposeException);
            }

            throw;
        }

        disposable.Dispose(); //Let it throw on its own
    }

SafeUsingBlock is defined as an extension method off any type that implements IDisposable. It takes an Action delegate, which represents the code to run inside the “using block”. Since the method uses generics, the Action delegate is handed the concrete type of IDisposable you created, not just an abstract IDisposable.

You can use a safe using block in a very similar fashion to a normal using block, except for one key thing: jump statements won’t work the way they would in a normal block. For example, you can’t use return, break, continue, etc. inside the safe using block and expect it to affect the method outside. Keep in mind that you’re writing inside a new anonymous method, not inside a code block.
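Now that .NET 4.0 ships its own System.AggregateException, here is a minimal sketch of the same extension method written against that built-in type (the `SafeUsing`, `QuietDisposable` and `SafeUsingDemo` names are hypothetical). The demo shows the limitation above in action: `return` inside the lambda leaves only the lambda, and the enclosing method carries on afterwards. A `break` or `continue` aimed at a loop outside the lambda would not even compile.

```csharp
using System;

public static class SafeUsing
{
    // Runs the action, then disposes. If both the action and Dispose throw, both
    // exceptions are wrapped in an AggregateException so neither is lost.
    public static void SafeUsingBlock<TDisposable>(this TDisposable disposable, Action<TDisposable> action)
        where TDisposable : IDisposable
    {
        try
        {
            action(disposable);
        }
        catch (Exception actionException)
        {
            try
            {
                disposable.Dispose();
            }
            catch (Exception disposeException)
            {
                throw new AggregateException(actionException, disposeException);
            }
            throw; // Dispose succeeded, so rethrow the original exception unchanged.
        }
        disposable.Dispose(); // The action succeeded: let any Dispose exception propagate as-is.
    }
}

// Test double from the post: Dispose always throws.
public class CrashingDisposable : IDisposable
{
    public void Dispose() => throw new Exception("Inside Dispose");
}

// Records whether it was disposed.
public class QuietDisposable : IDisposable
{
    public bool Disposed { get; private set; }
    public void Dispose() => Disposed = true;
}

public static class SafeUsingDemo
{
    // Counts items up to the first "stop"; the early return only exits the lambda.
    public static string Describe(string[] items)
    {
        int processed = 0;
        new QuietDisposable().SafeUsingBlock(d =>
        {
            foreach (string item in items)
            {
                if (item == "stop")
                    return; // Leaves the lambda only; Describe keeps running.
                processed++;
            }
        });
        // Reached even after the early return above.
        return "processed " + processed;
    }
}
```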

SafeUsingBlock is part of DigitallyCreated Utilities, however, it is currently only available in the trunk repository, not as a part of a release. Once I’m done working on the documentation, I’ll put it out in a full release.

DigitallyCreated v4.0 Launched

February 14, 2010 3:03 PM by Daniel Chambers (last modified on February 14, 2010 3:33 PM)

After over a month of work (in between replaying Mass Effect and my part-time work at Onset), I’ve finally finished the first version of DigitallyCreated v4.0. DigitallyCreated has been my website for many many years and has seen three major revisions before this one. However, those revisions were only HTML layout revisions; v3.0 upgraded the site to use a div-based CSS layout instead of a table layout, and v2.0 was so long ago I don’t even remember what it upgraded over v1.0.

Version 4 is DigitallyCreated’s biggest change since its very first version. It has received a brand new look and feel, and instead of being static HTML pages with a touch of two-second hacked-up PHP thrown in, v4.0 is written from the ground up using C#, .NET Framework 3.5 SP1, and ASP.NET MVC. Obviously, there’s no point re-inventing the wheel (ignoring the irony that this is a custom-made blog site), so DigitallyCreated uses a few open-source libraries (.NET ones and JavaScript ones) in its makeup:

- DigitallyCreated Utilities – a few libraries full of lots of little utilities ranging from MVC HtmlHelpers, to DateTime and TimeZoneInfo utilities, to Entity Framework utilities and LINQ extensions, to a pretty error and exception emailing utility.

- DotNetOpenAuth – a library that implements the OpenID standard, used for user authentication

- XML-RPC.NET – a library that allows you to create XML-RPC web services (since WCF doesn’t natively support it)

- Unity Application Block – a Microsoft open-source dependency injection library (used to decouple the business and UI layers)

- xVal – a library that enables declarative, attribute-based, server-side & client-side form validation

- jQuery – the popular JavaScript library, without which JavaScript development is like pulling teeth without anaesthetic

- jQuery.Validate – an extension to jQuery that makes client-side form validation easier (used by xVal)

- openid-selector (modified) – the JavaScript that powers the pretty logos on the OpenID sign in page

- qTip – a jQuery extension that makes doing rich tooltips easier

- IE6Update – a JavaScript library that encourages viewers still on IE6 to upgrade to a browser that doesn’t make baby Jesus cry

- SyntaxHighlighter – a JavaScript library that handles the syntax highlighting of any code snippets I use

So what are some of the cool features that make v4.0 so much better? Firstly, I’ve finally got a real blogging system. Using an implementation of the MetaWeblog API (plus some small extras), I can use Windows Live Writer (which is awesome, FYI) to write my blog posts and publish them to the website. The site automatically generates RSS feeds for almost everything to do with blogs. You can get an RSS feed for all blogs, for all blogs in a particular category or tag, for all comments, for comments on a blog, or for all comments on all blogs in a particular category or tag. Thanks to ASP.NET MVC, that was really easy to do, since most of the feeds are simply a different “view” of the blog data. And finally, the blog system now allows people to comment on blog posts, a long-needed feature.

The new blog system makes blogging so much more convenient for me. Previously, posting a blog was a chore: write the post, put the HTML into a new file, tell the hacky, rubbish PHP code about the new file (increment the total number of posts), manually update the RSS feed XML, and then upload all the changes via FTP. Now I just write the post in Live Writer and click Publish.

The URLs that blog posts use are now slightly search engine optimised (i.e. they use a slug, which puts the post title in the URL). I’ve made sure that the old permalinks to blog posts still work on the new site: if you use an old URL, you’ll get permanently redirected (HTTP 301) to the new URL. Permalinks are a promise. :)
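The slug generation itself isn't shown. A minimal sketch, assuming a slug is simply the lower-cased title with every run of characters other than ASCII letters and digits collapsed into a single hyphen (`SlugHelper.Slugify` is a hypothetical name):

```csharp
using System;
using System.Text.RegularExpressions;

public static class SlugHelper
{
    // Turns a post title into a URL slug, e.g. for use in /blog/{slug}
    public static string Slugify(string title)
    {
        string slug = title.ToLowerInvariant();
        // Collapse each run of characters that aren't a-z or 0-9 into one hyphen
        slug = Regex.Replace(slug, "[^a-z0-9]+", "-");
        // Drop any hyphens left over at either end
        return slug.Trim('-');
    }
}
```

Note that this simple version discards accented letters entirely, so a real implementation might want to transliterate them first.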

Other than the big blog system, there are some other cool things on the site. One is the fact you can sign in with your OpenID. Doing your own authentication is so 2006 :). From the user’s perspective it means that they don’t need to sign up for a new account just to comment on the blog posts. They can simply log in with their existing OpenID, which could be their Google or Yahoo account (or soon their Windows Live ID). If their OpenID provider supports it (and the user allows it), the site pulls some of their details from their OpenID, like their nickname, email, website, and time zone, so they don’t have to enter them manually. I use the user’s email address to pull and display their Gravatar when they submit a comment. All the dates and times on the site are adjusted to match the logged-in user’s time zone.
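Both of those last two features are small amounts of code. Gravatar looks up avatars by an MD5 hash of the trimmed, lower-cased email address, and the time zone adjustment is a single `TimeZoneInfo` call. A sketch under those assumptions (`UserDisplayHelper` and its method names are hypothetical, and the site's own implementation isn't shown):

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class UserDisplayHelper
{
    // Builds a Gravatar image URL from an email address: MD5 of the trimmed,
    // lower-cased address, written as lowercase hex
    public static string GetGravatarUrl(string email, int sizeInPixels)
    {
        string normalised = email.Trim().ToLowerInvariant();
        byte[] hash;
        using (MD5 md5 = MD5.Create())
        {
            hash = md5.ComputeHash(Encoding.UTF8.GetBytes(normalised));
        }

        var hex = new StringBuilder(hash.Length * 2);
        foreach (byte b in hash)
            hex.Append(b.ToString("x2"));

        // "s" is Gravatar's image size parameter
        return "https://www.gravatar.com/avatar/" + hex + "?s=" + sizeInPixels;
    }

    // Converts a timestamp stored in UTC into the signed-in user's time zone
    public static DateTime ToUserTime(DateTime utc, TimeZoneInfo userTimeZone)
    {
        return TimeZoneInfo.ConvertTimeFromUtc(DateTime.SpecifyKind(utc, DateTimeKind.Utc), userTimeZone);
    }
}
```

Storing everything in UTC and converting only at display time keeps the database free of per-user time zone assumptions.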

Another good thing is that the home page is no longer a waste of space. In v3.0, the home page displayed some out-of-date website news and other old information. Because uploading blog posts was a manual process, I couldn’t be bothered putting blog snippets on the home page, since that would have meant doing it manually for every blog post. In v4.0, the site does it for me. It also runs a background thread that polls my Twitter feed and pulls my tweets for display.
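How the polling works isn't described. One common way to do it in .NET is a `System.Threading.Timer` that refreshes a cached copy on a thread-pool thread, so page requests never wait on Twitter. A minimal sketch, in which `TweetCache` and the `fetchTweets` delegate are hypothetical stand-ins for however the site actually reads the feed:

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Periodically refreshes a cached list of recent tweets in the background
public sealed class TweetCache : IDisposable
{
    private readonly Func<IList<string>> _fetchTweets; // assumption: whatever actually downloads the feed
    private readonly Timer _timer;
    private volatile IList<string> _latest = new List<string>().AsReadOnly();

    public TweetCache(Func<IList<string>> fetchTweets, TimeSpan interval)
    {
        _fetchTweets = fetchTweets;
        // Fire once immediately, then every 'interval', on a thread-pool thread
        _timer = new Timer(_ => Refresh(), null, TimeSpan.Zero, interval);
    }

    // What the home page reads; never blocks on the network
    public IList<string> Latest { get { return _latest; } }

    public void Refresh()
    {
        try
        {
            // Swap in a whole new read-only list, so readers never see a half-updated one
            _latest = new List<string>(_fetchTweets()).AsReadOnly();
        }
        catch (Exception)
        {
            // An unhandled exception on a thread-pool thread would terminate the process,
            // so keep showing the previous tweets if the feed is unavailable
        }
    }

    public void Dispose()
    {
        _timer.Dispose();
    }
}
```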

I’ve also trimmed a lot of content. The old DigitallyCreated had a lot of content that was ancient, never updated, and read like it had been written by a 15-year-old (it had been!). I cut all that stuff. However, I kept the resume page, and jazzed it up with a bit of JavaScript so that it’s slick and sexy. It no longer shows you my life history back into high school by default. Instead, it shows you what you care about: the most recent stuff. The older things are still there, but are hidden by default and can be shown by clicking “show me more”-style JavaScript links.

Behind the scenes there’s an error reporting system that catches crashes and emails nice, pretty exception object dumps to me, so that I know what went wrong, what page it occurred on, and when it happened, and can fix it. And of course, how could we forget funny error pages?
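The reporting system's code isn't shown, but the core of such a dump is walking the exception and its inner exceptions. A minimal sketch of the formatting half (`ErrorReport.Build` is a hypothetical name; the site's real dumps are presumably richer):

```csharp
using System;
using System.Globalization;
using System.Text;

public static class ErrorReport
{
    // Formats an exception, and every inner exception beneath it, into a plain-text report
    public static string Build(Exception exception, string pageUrl, DateTime occurredUtc)
    {
        var report = new StringBuilder();
        report.AppendLine("Page: " + pageUrl);
        report.AppendLine("Time (UTC): " + occurredUtc.ToString("u", CultureInfo.InvariantCulture));

        int depth = 0;
        for (Exception current = exception; current != null; current = current.InnerException)
        {
            report.AppendLine();
            report.AppendLine((depth == 0 ? "Exception: " : "Inner exception " + depth + ": ") + current.GetType().FullName);
            report.AppendLine("Message: " + current.Message);
            report.AppendLine("Stack trace:");
            // StackTrace is null for exceptions that were never thrown
            report.AppendLine(current.StackTrace ?? "(none)");
            depth++;
        }
        return report.ToString();
    }
}
```

The resulting string could then be sent with something like `System.Net.Mail.SmtpClient.Send(from, to, subject, body)` from a global error handler; the mail settings are deployment-specific and left out here.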

Of course, all this new stuff is just the beginning of the improvements I’m making to the site. There are more features that I wanted to add, but I had to draw the release line somewhere, so I drew it here. In the future, I’m looking at:

- Not displaying the full blog posts in the blog post lists. Instead, I’ll have a “show me more”-style link that shows the rest of the post when you click it.

- A right-hand panel on the blog pages which will contain, in particular, a list of categories and a coloured tag cloud. Currently, the only way to browse tags and categories is to see one used on a post and to click it. That’s rubbish discoverability.

- “Reply to” in comments, so that people can reply to other comments. I may also consider a peer reviewed comment system where you can “thumbs-up” and “thumbs-down” comments.

- The ability to associate multiple OpenIDs with the same account here at DigitallyCreated. This could be useful for people who want to change their OpenID, or use a new one.

- A full file upload system, which only I will be able to use, for when I want to upload a file for a friend to download that’s too big for email (like a large installer or something). It will be possible to secure the files so that only particular OpenIDs can download them.

- An in-browser blog editing UI, so that I’m not tied to using Windows Live Writer to write blogs.

- Blog search. I might fiddle with my own implementation for fun, but search is hard, so I may end up delegating to Google or Bing.

In terms of deployment, I’ve had quite the saga, which is the main reason why the site is late in coming. Originally, I was looking at hosting at either WebHost4Life or DiscountASP.NET. DiscountASP.NET looked totally pro and seemed like it was targeted at developers, unlike a lot of other hosts that target your mum. However, WebHost4Life seemed to provide similar features with more bandwidth and disk space for half the price. Their old site had a few awards on it, which lent it credibility to me (they’ve since changed it and those awards have disappeared). So I went with them over DiscountASP.NET. Unfortunately, their services never worked correctly. I had to talk to support to get signed up, since I didn’t want to transfer my domain over to them (a common need, surely!). I had to talk to support to get FTP working. I had to talk to support when the file manager control panel didn’t work. The last straw for me was when I deployed DigitallyCreated, and I got an AccessViolationException complaining that I probably had corrupt memory. After talking to support, they fixed it for me and declared the site operational. Of course, they didn’t bother to go past the front page (and ignored the broken Twitter feed). All pages other than the front page were broken (seemed like the ASP.NET MVC URL routing wasn’t happening). I talked to support again and they spent the week making it worse, and at the end the front page didn’t even load any more.

So instead I discovered WinHost, who offer better features than WebHost4Life (though with less bandwidth and disk space), but for half the price and without requiring a yearly contract! I signed up with them today, and within a few hours DigitallyCreated was up and running perfectly. Wonderful! They even offer IIS Manager access to IIS and SQL Server Management Studio access to the database. Thankfully, I only took the risk with WebHost4Life because they have a 30-day money-back guarantee, so provided they don’t screw me, I’ll get my money back from them.

Now that v4.0 is up and running, I will start blogging again. I’ve got some good topics and technologies that I’ve been saving up throughout my hiatus in January to blog about once the site went live. DigitallyCreated v4.0 has been a long time coming; I hope you like it, and now, thanks to comments, you can tell me how much it sucks!

Australian Internet Censorship: Conroy's Reply

January 07, 2010 2:00 PM by Daniel Chambers (last modified on March 09, 2010 9:32 AM)

Today I received Senator Conroy's reply to the letter I wrote to him, in which I decried the mandatory Internet filter that Conroy wants to install at an ISP level for all of Australia. In short, the reply was patronisingly and almost offensively generic. I realise that sending out "standard words" in reply to letters is general policy, but that doesn't mean I agree with it. These politicians are supposed to be representing us; it's their job to address the concerns of the people they represent. And my concerns were anything but addressed.

Unsurprisingly, considering how far this farce has got already, Conroy effectively ignored the arguments, concerns and alternatives I highlighted in my letter and spewed his standard line of misleading half-truths and misrepresentations back at me. As I have discussed before, I consider half-truths a form of lying, so this makes Conroy's letter particularly distasteful.

You can read Conroy's reply letter by downloading the PDF I made of it. Note that I have left my scribbles on the pages, where I highlighted various inaccuracies, misrepresentations and misleading facts that he asserted as I read it.

Of course, I can't let this issue lie. The Internet is a massive part of my life and I can't stand by and watch Australians' access to it be so damaged by Conroy's folly. So I wrote a reply to Conroy, in which I debunk his letter and again present the facts. This is what I wrote:

Dear Minister,

RE: Your response letter titled “Cyber-safety and internet service provider filtering”

I received your reply to my letter, in which I highlighted the serious issues I have with your campaign to mandatorily censor and filter the Australian Internet, and was extremely disappointed. Your letter was obviously a generic response and failed to satisfactorily address most of the issues that I highlighted.

In your letter, you again assert that this filter is for the protection of children; however, you ignore the fact that there is plenty of material (legally rated X18+) that the filter will not remove, and therefore children will be exposed to inappropriate material anyway. You note that you will encourage ISPs to provide optional filtering of this material to families, but this just highlights the inconsistency of the ISP-level filter being mandatory, since the optional filter would need to be enabled for it to even start to serve its purpose.

You fail to justify why this filter needs to be mandatory for 100% of Australian adults. If the idea is to protect those adults who would be offended by inadvertent exposure to RC material, there is absolutely no need to make this filter mandatory; these people could simply opt in to an optional filter if they felt the need. My experience, and the experience of my family, is that one does not just easily and inadvertently “run into” inappropriate material on the Internet, which makes this filter more of a liability than a benefit. This is emphasised by the fact that those who wish to circumvent the filter and see inappropriate material can do so easily.

You assert that the blocking of Refused Classification (RC) material is a good thing, and in many cases, most notably child pornography, this is true. However, the RC rating covers many areas that are morally and legally grey. One should note that an issue being morally and legally grey does not mean that it is necessarily unacceptable behaviour. For instance, 50 years ago homosexuality was regarded as morally grey, but now it is regarded as acceptable behaviour. An example of a modern morally and legally grey area that the RC rating would mandatorily block is euthanasia, which, incidentally, is legal in the Netherlands. If Australians are blocked from accessing this sort of material, how are we as a nation supposed to educate ourselves about the issues? You cannot do that for us, as in a democracy you only represent us; you do not dictate how we should think.

You again misrepresent the results of the Enex Testlab report by claiming that the URL filtering technology is 100% accurate. This is, at best, a half-truth. You notably fail to consider the 5% of pages that the report says will be blocked incorrectly, and the 20% of pages that should be blocked but will be let through. Not only does your misrepresentation twist the truth, but it also serves to lull those Australians less informed than I into thinking that your filter is a panacea. You assert that you have “always said that filtering is not a silver bullet”, yet you say that it is 100% accurate.

You also fail to address how it is acceptable that, because of the use of a blacklist, entire websites may be mistakenly taken offline for all Australians. You cannot assert that mistakes will not happen, as we all know that mistakes can and do happen. Case in point, a country that you assert “enjoys” filtering: the United Kingdom. In 2008, the UK was blocked from accessing the website Wikipedia (a key Internet resource) because it was mistakenly added to their blocklists. This is evidence that such a filter will harm our access to valid websites, and this is far too high a price to pay given the existence of other, less damaging solutions to child protection on the Internet.

As a trained IT professional, I was insulted by your patronising and misleading metaphor that dismissed the valid 5-10% performance penalties that Australians will mandatorily pay if you implement the filter: “the impact on performance would be less than one seventieth of the blink of an eye”. Your use of this metaphor indicates that you do not understand that, at the time scales at which computers operate, 1/70 of an eye blink is an incredibly long time. Although this time period may not be grossly evident to Australians using websites, many new technologies are starting to use the web and the Internet as a medium for their operation (for example, web services). These products operate at a computer time-scale, not a human time-scale, and their operations would be negatively affected.

In addition, in the IT industry, performance is an important factor in deciding what technologies to adopt; something that is 10% faster will be used over something that is not. By effectively wiping 10% off Australia’s Internet speeds in one broad stroke for questionable gains, you make us less competitive in the IT market than those parts of the world that do not implement such draconian mandatory filtering initiatives. Australia is already an expensive place to run Internet services (compared to countries like the USA), and this only makes us more unattractive as a place to foster and run Internet technologies.

The idea behind the filter, the protection of children, is an admirable goal; however, the filter is an extremely ineffective and potentially damaging way of addressing this issue. My previous suggestion, the voluntary installation of filter software on home computers, solves the problem as effectively as your filter, but without the censorship, performance and mandatory enforcement issues. You may argue that technically competent children may circumvent the home computer filter; however, the same is true of your ISP-level filter.

Another, less optimal, solution is to have ISP-level filtering, but to make it optional for those who wish to have it. However, this is less optimal than the home computer filter solution, since it would be much more expensive to implement an ISP-level filter than to offer a free home computer filter. The Howard Government did exactly that (offered a free home computer filter), although it should have been more effective at educating families about its existence and usage.

I hope this makes the fatal flaws in your mandatory ISP-level filter scheme clearer and presents you with some viable alternative solutions. I would prefer to see my tax dollars spent more effectively, in a way that improves Australia for Australians rather than weakens it.

Yours sincerely,

Daniel Chambers

Of course, Conroy is almost religious in his support of the filter, meaning that he will likely ignore this letter as blindly as he ignored my previous one. I think this quote fairly accurately describes Conroy at this point:

Nobody is more dangerous than he who imagines himself pure in heart, for his purity, by definition, is unassailable.

--James Baldwin

So, in addition to sending this letter to him, I will print a copy of my previous letter, Conroy's reply, and this letter and send them to Tony Smith, the Shadow Minister for Broadband, Communications and the Digital Economy, along with a brief cover letter explaining my displeasure. Credit goes to burntsugar on Twitter for this idea. This is the cover letter:

Dear Sir,

I am writing to you to inform you of my extreme displeasure with the Labor party’s attempts to mandatorily censor and filter the Australian internet at an ISP-level. I have sent two letters to Senator Conroy and one to my local Member of Parliament (Anna Burke of Chisholm) expressing my views and suggesting viable alternatives. I have attached my letters to Senator Conroy and his reply to my first letter so that you are able to understand my concerns.

I am disappointed to see how little the Liberal party has argued against Conroy’s ineffective filter scheme. I hope that by informing you of my concerns, which are replicated by almost every person that I have discussed this issue with, you will assign a higher priority to fighting against this absurd waste of taxpayers’ dollars by voting against the introduction of this legislation in Parliament. Certainly, this issue will be a key point for me when deciding whom to vote for at the next election.

Yours sincerely,

Daniel Chambers

Hopefully, this will help kick the Liberals into gear when it comes to fighting Conroy's folly in Parliament.

As you can see, the fight to preserve the Australian Internet continues unabated. In this war, your silence is taken as acceptance of the filter, so please, if you haven't yet written to your local Member of Parliament, Senator Conroy and Tony Smith and told them that you find this censorship scheme abhorrent, take the time to do so. Your few hours now will help to save you much grief in the future. The only way to make our voices heard by the politicians is for us all to shout.

Also, spread the word about the filter around your social circles. I've been surprised at the number of people I've talked to who had no idea that this was even going on. The more people that know about this, the harder it will be for the politicians to slip this under the radar. So, keeping this in mind, and in the same fashion as my last blog on this issue, I will leave you with a pertinent quote:

I believe that ignorance is the root of all evil. And that no one knows the truth.

--Molly Ivins

Entity Framework, TransactionScope and MSDTC

January 01, 2010 2:00 AM by Daniel Chambers (last modified on June 16, 2011 2:05 PM)

Update: Please note that the behaviour described in this article only occurs when using SQL Server 2005. SQL Server 2008 (with .NET 3.5 or later) can handle multiple sequential connections (using the same connection string) within a transaction without requiring MSDTC promotion.

I've been tightening up code on a website I'm writing for work, and as such I've been improving the transactional integrity of some of the code that talks to our database (written using Entity Framework). Namely, I've been using TransactionScope to create transactions at specific isolation levels to ensure that no weird concurrency issues can slip in.
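For reference, a TransactionScope with a specific isolation level is created by passing it a TransactionOptions; without one, TransactionScope defaults to Serializable. A small sketch, where `TransactionHelper.Create` is a hypothetical convenience wrapper rather than anything from the site's code:

```csharp
using System;
using System.Transactions;

public static class TransactionHelper
{
    // Creates (or joins) an ambient transaction at the requested isolation level
    // instead of TransactionScope's default of Serializable
    public static TransactionScope Create(IsolationLevel isolationLevel)
    {
        var options = new TransactionOptions
        {
            IsolationLevel = isolationLevel,
            Timeout = TransactionManager.DefaultTimeout
        };
        return new TransactionScope(TransactionScopeOption.Required, options);
    }
}
```

Usage follows the normal pattern: `using (TransactionScope scope = TransactionHelper.Create(IsolationLevel.RepeatableRead)) { /* queries */ scope.Complete(); }`.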

TransactionScope is very powerful. It can maintain your transaction across two (or more) database connections, or even after you close the connection to the database (or at least, the SQL Server database code that uses it can). It does this by promoting your transaction to a "distributed transaction" managed by the Microsoft Distributed Transaction Coordinator (MSDTC) when you start to use multiple connections, or close the connection that your transaction is in. So, essentially, as soon as your transaction becomes something that SQL Server can't handle with a normal transaction, it is palmed off to MSDTC to manage.

An MSDTC transaction comes at a performance price, as it is no longer a lightweight transaction managed internally by SQL Server, but a much more powerful, heavyweight MSDTC transaction. So, if at all possible, we want to avoid MSDTC (unless, of course, we actually need it).

However, there is a funny (or not so funny, when you think about it) behaviour that Entity Framework exhibits that causes your innocent transaction to be promoted to being an MSDTC transaction. Consider this code:

```csharp
using (TestDBEntitiesContext context = new TestDBEntitiesContext())
{
    using (TransactionScope transaction = new TransactionScope())
    {
        var authors = (from author in context.Authors
                       select author).ToList();

        int count = (from author in context.Authors
                     select author).Count();

        transaction.Complete();
    }
}
```

This looks like a pretty innocent bit of code and looks like it should not result in transaction promotion. By putting the lifetime of the transaction (its using block) inside the lifetime of the ObjectContext, we ensure that the transaction cannot outlive any connection used by the context and therefore cause a promotion to an MSDTC transaction.

However, this code causes a transaction promotion on the second query (the Count() call). You can see this by making sure the MSDTC service ("Distributed Transaction Coordinator") is stopped and then running the code: an exception will be thrown, because the SqlConnection is unable to promote the transaction while MSDTC is not running.
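Stopping MSDTC works, but promotion can also be detected from code: a transaction that is still lightweight reports `Guid.Empty` as its `TransactionInformation.DistributedIdentifier`. A small sketch (`PromotionCheck` is a hypothetical helper name) that could be asserted on after each query while debugging:

```csharp
using System;
using System.Transactions;

public static class PromotionCheck
{
    // Returns true if the ambient transaction has been promoted to a distributed (MSDTC) transaction.
    // A lightweight, non-promoted transaction has an empty DistributedIdentifier.
    public static bool IsPromoted()
    {
        Transaction current = Transaction.Current;
        if (current == null)
            throw new InvalidOperationException("There is no ambient transaction.");
        return current.TransactionInformation.DistributedIdentifier != Guid.Empty;
    }
}
```

Placing `System.Diagnostics.Debug.Assert(!PromotionCheck.IsPromoted());` after each query in the code above would pinpoint exactly which query triggers the promotion.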

Why does this occur? A bit of digging on MSDN comes up with this bit of innocuous documentation:

Promotion of a transaction to a DTC may occur when a connection is closed and reopened within a single transaction. Because Object Services opens and closes the connection automatically, you should consider manually opening and closing the connection to avoid transaction promotion.

By sticking some breakpoints in the code above, we can observe this behaviour in action. The connection (ObjectContext.Connection) is closed by default; it is opened for the first query and closed immediately afterwards, then opened again for the second query and closed again. It is this second opening of the connection that causes the transaction promotion!

At first glance this seemed to me to be an inefficient way of handling the connection! It's not uncommon to want to do more than one thing with the ObjectContext in sequence, and opening and closing a connection for each query seemed wasteful.

However, upon further thought, I realised the reason why the Entity Framework team does this is probably to cover the use case where you have a long-lived ObjectContext (unlike here where we create it quickly, use it, and then throw it away). If the ObjectContext is going to be around for a long time (perhaps you've got one hanging around supplying data to a WPF dialog) we don't want it to hog a connection for all that time (99% of the time it will be idling waiting for the user!).

However, this "feature" gets in our way when using the ObjectContext in the manner above. To change this behaviour you need to sack the ObjectContext from the connection management job and do it yourself:

```csharp
using (TestDBEntitiesContext context = new TestDBEntitiesContext())
{
    using (TransactionScope transaction = new TransactionScope())
    {
        context.Connection.Open();

        var authors = (from author in context.Authors
                       select author).ToList();

        int count = (from author in context.Authors
                     select author).Count();

        transaction.Complete();
    }
}
```

Notice that we now open the connection manually ourselves (context.Connection.Open()) before running either query. This ensures that the connection will be open for the duration of both our queries, and it will be closed when the ObjectContext is disposed at the end of its using block.
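Why does opening the connection yourself help? For the behaviour described here, what matters is that the ObjectContext only closes a connection that it opened itself; a connection that is already open when a query runs is left open. A toy model of that rule (`FakeConnection` and `FakeContext` are hypothetical classes, not Entity Framework types) makes the difference in the number of opens visible:

```csharp
using System;

// Toy model, not Entity Framework's actual implementation: it only mimics the
// connection-ownership rule that makes manually opening the connection work
public sealed class FakeConnection
{
    public bool IsOpen { get; private set; }

    // Counts how many times the connection was opened; on SQL Server 2005, reopening
    // the connection inside a TransactionScope is what triggers promotion to MSDTC
    public int OpenCount { get; private set; }

    public void Open()
    {
        if (IsOpen)
            throw new InvalidOperationException("The connection is already open.");
        IsOpen = true;
        OpenCount++;
    }

    public void Close()
    {
        IsOpen = false;
    }
}

public sealed class FakeContext
{
    private readonly FakeConnection _connection = new FakeConnection();

    public FakeConnection Connection { get { return _connection; } }

    // Opens the connection only if it is closed, and closes it afterwards only if
    // this call was the one that opened it; a connection opened by the caller is left alone
    public void RunQuery()
    {
        bool openedHere = !_connection.IsOpen;
        if (openedHere)
            _connection.Open();
        try
        {
            // The query itself would run here
        }
        finally
        {
            if (openedHere)
                _connection.Close();
        }
    }
}
```

Running two queries against a fresh `FakeContext` opens the connection twice; opening `Connection` first and then running the same two queries opens it only once, and it stays open afterwards.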

Using this technique we can avoid the accidental promotion of our lightweight database transaction to a heavyweight MSDTC transaction, and thereby scrape back some lost performance.

Speeding up .NET Reflection with Code Generation

December 20, 2009 2:00 PM by Daniel Chambers

One of the bugbears I have with Entity Framework in .NET is that when you use it behind a method in the business layer (think AddAuthor(Author author)) you need to manually wipe non-scalar properties when adding entities. I define non-scalar properties as:

- EntityCollections: the "many" ends of relationships (think Author.Books)

- The single end of relationships (think Book.Author)

- Properties that participate in the Entity Key (primary key properties) (think Author.ID). These need to be wiped as the database will automatically fill them (identity columns in SQL Server)

If you don't wipe the relationship properties when you add the entity object you get an UpdateException when you try to SaveChanges on the ObjectContext.

You can't assume that someone calling your method knows about this Entity Framework behaviour, so I consider it good practice to wipe the properties for them so they don't see unexpected UpdateExceptions floating up from the business layer. Obviously, having to write

author.ID = default(int); author.Books.Clear();

is no fun (especially on entities that have more properties than this!). So I wrote a method that you can pass an EntityObject to, and it will wipe all non-scalar properties for you using reflection. This means that it doesn't need to know about your specific entity type until you call it, so you can use it with whatever entity classes your project has. However, calling methods dynamically via reflection is really slow. There will probably be much faster ways of doing it in .NET 4.0 thanks to the DLR, but for those of us still back here in 3.5-land, it's slow.
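The reflection-only version isn't listed here, but the slow approach looks roughly like the sketch below. To keep it self-contained, it uses two hypothetical marker attributes (`KeyPropertyAttribute` and `NavigationPropertyAttribute`) in place of Entity Framework's `EdmScalarPropertyAttribute` and `EdmRelationshipNavigationPropertyAttribute`, and plain `List<T>` collections in place of `EntityCollection<T>`:

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical marker attributes standing in for EF's EdmScalarProperty(EntityKeyProperty = true)
// and EdmRelationshipNavigationProperty attributes
[AttributeUsage(AttributeTargets.Property)]
public sealed class KeyPropertyAttribute : Attribute { }

[AttributeUsage(AttributeTargets.Property)]
public sealed class NavigationPropertyAttribute : Attribute { }

// Hypothetical sample entities
public class Author
{
    public Author() { Books = new List<Book>(); }
    [KeyProperty] public int ID { get; set; }
    public string Name { get; set; }
    [NavigationProperty] public List<Book> Books { get; private set; }
}

public class Book
{
    [KeyProperty] public int ID { get; set; }
    public string Title { get; set; }
    [NavigationProperty] public Author Author { get; set; }
}

public static class ReflectionPropertyClearer
{
    // Every call rediscovers the properties and invokes getters, setters and Clear() late-bound,
    // which is the per-call cost that generating code once avoids
    public static void ClearNonScalarProperties(object entity)
    {
        foreach (PropertyInfo property in entity.GetType().GetProperties())
        {
            bool isKey = property.IsDefined(typeof(KeyPropertyAttribute), true);
            bool isNavigation = property.IsDefined(typeof(NavigationPropertyAttribute), true);

            if (isNavigation && IsCollection(property.PropertyType))
            {
                // "Many" end: call Clear() on the collection dynamically
                object collection = property.GetValue(entity, null);
                if (collection != null)
                    property.PropertyType.GetMethod("Clear", Type.EmptyTypes).Invoke(collection, null);
            }
            else if (isKey || isNavigation)
            {
                // Entity key or single end: reset to default(T)
                object defaultValue = property.PropertyType.IsValueType
                    ? Activator.CreateInstance(property.PropertyType)
                    : null;
                property.SetValue(entity, defaultValue, null);
            }
        }
    }

    private static bool IsCollection(Type type)
    {
        foreach (Type candidate in type.GetInterfaces())
        {
            if (candidate.IsGenericType && candidate.GetGenericTypeDefinition() == typeof(ICollection<>))
                return true;
        }
        return false;
    }
}
```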

So how can we enjoy the benefits of reflection but without the performance cost? Code generation at runtime, that's how! Instead of using reflection and dynamically calling methods and setting properties, we can instead use reflection once, create a new class at runtime that is able to wipe the non-scalar properties and then reuse this class over and over. How do we do this? With the System.CodeDom API, that's how (maybe in .NET 4.0 you could use the expanded Expression Trees functionality). The CodeDom API allows you to literally write code at runtime and have it compiled and loaded for you.
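The .NET 4.0 alternative mentioned above, expression trees, follows the same pay-once idea without generating and compiling source code: use reflection once to build the assignments, compile them to a delegate, then cache and reuse the delegate. A minimal sketch of that swapped-in technique (`CompiledResetter` and `SampleEntity` are hypothetical names, and it only covers resetting properties, not clearing collections):

```csharp
using System;
using System.Collections.Generic;
using System.Linq.Expressions;

// Hypothetical sample entity
public class SampleEntity
{
    public int ID { get; set; }
    public string Name { get; set; }
    public SampleEntity Parent { get; set; }
}

public static class CompiledResetter
{
    // Builds entity => { entity.P1 = default(T1); entity.P2 = default(T2); ... } once,
    // and compiles it to a delegate that can be reused without any further reflection
    public static Action<T> Build<T>(IEnumerable<string> propertyNames)
    {
        ParameterExpression entity = Expression.Parameter(typeof(T), "entity");
        var assignments = new List<Expression>();
        foreach (string name in propertyNames)
        {
            MemberExpression property = Expression.Property(entity, name);
            assignments.Add(Expression.Assign(property, Expression.Default(property.Type)));
        }

        // Expression.Block needs at least one expression, so fall back to an empty body
        Expression body = assignments.Count == 0
            ? (Expression)Expression.Empty()
            : Expression.Block(assignments);
        return Expression.Lambda<Action<T>>(body, entity).Compile();
    }
}
```

As with the CodeDom approach, the compiled delegate would be stored per entity type (for example in a dictionary keyed by `Type`) so that the build cost is only paid once.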

The code I'll go through below is available as part of the DigitallyCreated Utilities open source libraries (see the DigitallyCreated.Utilities.Linq.EfUtil.ClearNonScalarProperties() method).

What I have done is create an interface called IEntityPropertyClearer that has a method that takes an object and wipes the non-scalar properties on it. Classes that implement this interface are able to wipe a single type of entity (EntityType).

```csharp
public interface IEntityPropertyClearer
{
    Type EntityType { get; }

    void Clear(object entity);
}
```

I then have a helper abstract class that makes this interface easy to implement by the code generation logic by providing a type-safe method (ClearEntity) to implement and by doing some boilerplate generic magic for the EntityType property and casting in Clear:

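```csharp
public abstract class AbstractEntityPropertyClearer<TEntity> : IEntityPropertyClearer
    where TEntity : EntityObject
{
    //The entity type this clearer handles
    public Type EntityType
    {
        get { return typeof(TEntity); }
    }

    //Casts to the strongly-typed entity; objects of any other type are silently ignored
    public void Clear(object entity)
    {
        if (entity is TEntity)
            ClearEntity((TEntity)entity);
    }

    //Implemented by the generated class to wipe the non-scalar properties
    protected abstract void ClearEntity(TEntity entity);
}
```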

So the aim is to create a class at runtime that inherits from AbstractEntityPropertyClearer and implements the ClearEntity method so that it can clear a particular entity type. I have a method that we will now implement:

```csharp
private static IEntityPropertyClearer GeneratePropertyClearer(EntityObject entity)
{
    Type entityType = entity.GetType();
    ...
}
```

So the first thing we need to do is to create a "CodeCompileUnit" to put all the code we are generating into:

```csharp
CodeCompileUnit compileUnit = new CodeCompileUnit();
compileUnit.ReferencedAssemblies.Add(typeof(System.ComponentModel.INotifyPropertyChanging).Assembly.Location); //System.dll
compileUnit.ReferencedAssemblies.Add(typeof(EfUtil).Assembly.Location);
compileUnit.ReferencedAssemblies.Add(typeof(EntityObject).Assembly.Location);
compileUnit.ReferencedAssemblies.Add(entityType.Assembly.Location);
```

Note that we get the path to the assemblies we want to reference from the actual types that we need. I think this is a good approach as it means we don't need to hardcode the paths to the assemblies (maybe they will move in the future?).

We then need to create the class that will inherit from AbstractEntityPropertyClearer and the namespace in which this class will reside:

```csharp
//Create the namespace
string namespaceName = typeof(EfUtil).Namespace + ".CodeGen";
CodeNamespace codeGenNamespace = new CodeNamespace(namespaceName);
compileUnit.Namespaces.Add(codeGenNamespace);

//Create the class
string genTypeName = entityType.FullName.Replace('.', '_') + "PropertyClearer";
CodeTypeDeclaration genClass = new CodeTypeDeclaration(genTypeName);
genClass.IsClass = true;
codeGenNamespace.Types.Add(genClass);
Type baseType = typeof(AbstractEntityPropertyClearer<>).MakeGenericType(entityType);
genClass.BaseTypes.Add(new CodeTypeReference(baseType));
```

The namespace we create is the namespace of the utility class + ".CodeGen". The class's name is the entity's full name (including namespace) with all "."s replaced with "_"s and "PropertyClearer" appended (this prevents name collisions between entities with the same name in different namespaces). The generated class inherits from AbstractEntityPropertyClearer, with its generic type argument set to the type of entity we are dealing with (i.e. if the method was called with an Author, the base type would be AbstractEntityPropertyClearer<Author>).
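One caveat with this naming scheme: Type.FullName uses "+" to separate nested types (e.g. Outer+Inner), and generic type names contain a backtick (e.g. List`1), none of which are legal in a C# identifier, so replacing only "." would produce uncompilable generated code for such entities. A hedged sketch of a more defensive variant (`ClearerNaming.MakeClearerClassName` is a hypothetical helper that works on the full name string):

```csharp
using System;
using System.Text.RegularExpressions;

public static class ClearerNaming
{
    // Replaces every character other than ASCII letters, digits and underscores
    // ('.', '+', '`', '[', ',' and so on) with '_', then appends the suffix
    public static string MakeClearerClassName(string entityFullName)
    {
        return Regex.Replace(entityFullName, "[^A-Za-z0-9_]", "_") + "PropertyClearer";
    }
}
```

In the generation code, this would be called as `ClearerNaming.MakeClearerClassName(entityType.FullName)` in place of the `Replace('.', '_')` expression.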

We now need to create the ClearEntity method that will go inside this class:

```csharp
CodeMemberMethod clearEntityMethod = new CodeMemberMethod();
genClass.Members.Add(clearEntityMethod);
clearEntityMethod.Name = "ClearEntity";
clearEntityMethod.ReturnType = new CodeTypeReference(typeof(void));
clearEntityMethod.Parameters.Add(new CodeParameterDeclarationExpression(entityType, "entity"));
clearEntityMethod.Attributes = MemberAttributes.Override | MemberAttributes.Family;
```

Counterintuitively (for C# developers, anyway), "protected" scope is called MemberAttributes.Family in CodeDom.

We now need to find all EntityCollection properties on our entity type so that we can generate code to wipe them. We can do that with a smattering of LINQ against the reflection API:

```csharp
IEnumerable<PropertyInfo> entityCollections =
    from property in entityType.GetProperties()
    where
        property.PropertyType.IsGenericType &&
        property.PropertyType.IsGenericTypeDefinition == false &&
        property.PropertyType.GetGenericTypeDefinition() == typeof(EntityCollection<>)
    select property;
```

We now need to generate statements inside our generated method to call Clear() on each of these properties:

```csharp
foreach (PropertyInfo propertyInfo in entityCollections)
{
    CodePropertyReferenceExpression propertyReferenceExpression = new CodePropertyReferenceExpression(new CodeArgumentReferenceExpression("entity"), propertyInfo.Name);
    clearEntityMethod.Statements.Add(new CodeMethodInvokeExpression(propertyReferenceExpression, "Clear"));
}
```

Inside the loop, we first create a CodePropertyReferenceExpression that refers to the property on the "entity" variable, which is the argument we defined for the generated method. We then add to the method an expression that invokes the Clear method on this property reference. This gives us statements like entity.Books.Clear() (where entity is an Author).

We now need to find all the single-end-of-a-relationship properties (like book.Author) and entity key properties (like author.ID). Again, we use some LINQ to achieve this:

```csharp
//Find all single multiplicity relation ends
IEnumerable<PropertyInfo> relationSingleEndProperties =
    from property in entityType.GetProperties()
    from attribute in property.GetCustomAttributes(typeof(EdmRelationshipNavigationPropertyAttribute), true).Cast<EdmRelationshipNavigationPropertyAttribute>()
    //The attribute is also on the "many" ends (EntityCollections, handled above), so keep only entity-typed properties
    where typeof(EntityObject).IsAssignableFrom(property.PropertyType)
    select property;

//Find all entity key properties
IEnumerable<PropertyInfo> idProperties =
    from property in entityType.GetProperties()
    from attribute in property.GetCustomAttributes(typeof(EdmScalarPropertyAttribute), true).Cast<EdmScalarPropertyAttribute>()
    where attribute.EntityKeyProperty
    select property;
```

We then can iterate over both these sets in the one foreach loop (by using .Concat) and for each property we will generate a statement that will set it to its default value (using a default(MyType) expression):

```csharp
//Emit assignments that set the properties to their default value
foreach (PropertyInfo propertyInfo in relationSingleEndProperties.Concat(idProperties))
{
    CodeExpression defaultExpression = new CodeDefaultValueExpression(new CodeTypeReference(propertyInfo.PropertyType));
    CodePropertyReferenceExpression propertyReferenceExpression = new CodePropertyReferenceExpression(new CodeArgumentReferenceExpression("entity"), propertyInfo.Name);
    clearEntityMethod.Statements.Add(new CodeAssignStatement(propertyReferenceExpression, defaultExpression));
}
```

This generates statements such as entity.ID = default(int) (where entity is an Author) and entity.Author = default(Author) (where entity is a Book), which are added to the generated method.
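Reflection on its own cannot write default(T) for a type that is only known at runtime; the generated code avoids that problem because the compiler evaluates the default(MyType) expression. A common reflection-only workaround, sketched here with a hypothetical RuntimeDefaults helper (not the library's actual reflection path), is to create a zeroed instance for value types and use null for reference types:

```csharp
using System;
using System.Reflection;

// Hypothetical helper, not part of DigitallyCreated Utilities.
public static class RuntimeDefaults
{
    // Returns what default(T) would produce for a type known only at runtime:
    // a zeroed instance for value types (for Nullable<T> this boxes to null),
    // and null for reference types.
    public static object GetDefault(Type type)
    {
        return type.IsValueType ? Activator.CreateInstance(type) : null;
    }

    // Reflection-only equivalent of the generated assignment statements:
    // sets each given property on the target back to its default value.
    public static void ResetProperties(object target, params PropertyInfo[] properties)
    {
        foreach (PropertyInfo property in properties)
            property.SetValue(target, GetDefault(property.PropertyType), null);
    }
}
```

Every call here goes through reflection (Activator.CreateInstance plus PropertyInfo.SetValue), which is the kind of per-call overhead the compiled clearer is meant to avoid.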

Now that we've generated code to wipe out the non-scalar properties on an entity, we need to compile this code. We do this by passing our CodeCompileUnit to a CSharpCodeProvider for compilation:

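```csharp
CSharpCodeProvider provider = new CSharpCodeProvider();
CompilerParameters parameters = new CompilerParameters();
parameters.GenerateInMemory = true;
CompilerResults results = provider.CompileAssemblyFromDom(parameters, compileUnit);

//Fail loudly instead of continuing without a compiled assembly
if (results.Errors.HasErrors)
    throw new InvalidOperationException("Compiling the generated property clearer failed.");
```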

We set GenerateInMemory to true because we only need these types for as long as our AppDomain exists. GenerateInMemory also causes the generated assembly to be loaded automatically, so all that's left is to instantiate our new class and return it:

```csharp
Type type = results.CompiledAssembly.GetType(namespaceName + "." + genTypeName);
return (IEntityPropertyClearer)Activator.CreateInstance(type);
```

DigitallyCreated Utilities keeps a Dictionary of instances of these generated types in memory. If you call it with an entity type it hasn't seen before, it generates a new IEntityPropertyClearer for that type using the method we just created and saves it in the dictionary. This means we can reuse these instances, and because we keep track of which entity types we've already seen, we never try to regenerate a clearer class. The method that does this is the one you call to get your entities wiped:

```csharp
public static void ClearNonScalarProperties(EntityObject entity)
{
    IEntityPropertyClearer propertyClearer;

    //_PropertyClearers is a private static readonly IDictionary<Type, IEntityPropertyClearer>
    lock (_PropertyClearers)
    {
        if (_PropertyClearers.ContainsKey(entity.GetType()) == false)
        {
            propertyClearer = GeneratePropertyClearer(entity);
            _PropertyClearers.Add(entity.GetType(), propertyClearer);
        }
        else
            propertyClearer = _PropertyClearers[entity.GetType()];
    }

    propertyClearer.Clear(entity);
}
```

Note that the dictionary lookup and insertion are done inside a lock so that this method is thread-safe.
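The lock works fine here, since generation only happens once per entity type. On .NET 4 and later, one alternative is ConcurrentDictionary<TKey, TValue>.GetOrAdd combined with Lazy<T>, which avoids taking a lock on every lookup while still running the expensive factory only once per type. This is a sketch, not what DigitallyCreated Utilities does, and PerTypeCache is a hypothetical name:

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical per-type cache sketching an alternative to the lock + Dictionary pattern;
// this is not the DigitallyCreated Utilities implementation.
public sealed class PerTypeCache<TValue>
{
    private readonly ConcurrentDictionary<Type, Lazy<TValue>> _cache =
        new ConcurrentDictionary<Type, Lazy<TValue>>();
    private readonly Func<Type, TValue> _factory;

    public PerTypeCache(Func<Type, TValue> factory)
    {
        if (factory == null) throw new ArgumentNullException(nameof(factory));
        _factory = factory;
    }

    // Under contention GetOrAdd may build more than one Lazy wrapper, but it always
    // returns the single wrapper that was stored, and Lazy<T> (thread-safe by default)
    // runs the expensive factory at most once for that wrapper.
    public TValue Get(Type type)
    {
        Lazy<TValue> entry = _cache.GetOrAdd(type, t => new Lazy<TValue>(() => _factory(t)));
        return entry.Value;
    }
}
```

In ClearNonScalarProperties, the factory would wrap the clearer-generation step and the key would be entity.GetType().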

So now the question is: how much faster is this code generation approach than simply using reflection to call Clear() and set the properties dynamically?

I created a small benchmark that creates 10,000 Author objects (Authors have an ID, a Name and an EntityCollection of Books) and then wipes them with ClearNonScalarProperties (which uses the code generation). It does this 100 times. It then does the same thing but this time it uses reflection only. Here are the results:

| | Average | Standard Deviation |
|---|---|---|
| Using Code Generation | 466.0 ms | 39.9 ms |
| Using Reflection Only | 1817.5 ms | 11.6 ms |

As you can see, the code generation version runs nearly four times as fast as the reflection-only version (1817.5 ms / 466.0 ms ≈ 3.9). I assume this speed benefit would be even more pronounced for entities with more non-scalar properties than the paltry two that Author has.
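The benchmark code itself isn't shown in this post. As a minimal sketch of a harness that produces numbers in the same shape (mean and standard deviation over repeated runs), with MiniBenchmark as a hypothetical name and the population standard deviation as an assumption:

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;

// Hypothetical timing harness; not the benchmark used for the table above.
public static class MiniBenchmark
{
    // Runs the action `runs` times, recording each run's elapsed time in milliseconds.
    public static List<double> Measure(Action action, int runs)
    {
        List<double> timings = new List<double>(runs);
        for (int i = 0; i < runs; i++)
        {
            Stopwatch stopwatch = Stopwatch.StartNew();
            action();
            stopwatch.Stop();
            timings.Add(stopwatch.Elapsed.TotalMilliseconds);
        }
        return timings;
    }

    // Mean and population standard deviation of the recorded timings.
    public static (double Mean, double StdDev) Summarize(IReadOnlyCollection<double> timings)
    {
        double mean = timings.Average();
        double variance = timings.Sum(t => (t - mean) * (t - mean)) / timings.Count;
        return (mean, Math.Sqrt(variance));
    }
}
```

A row of the table would then come from something like MiniBenchmark.Summarize(MiniBenchmark.Measure(wipeTenThousandAuthors, 100)), where wipeTenThousandAuthors is a hypothetical Action that creates and clears 10,000 Author objects. Running each path once before measuring keeps one-off costs, such as JIT compilation, out of the averages.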

Even though this code generation logic is harder to write than just doing dynamic method calls using reflection, the results are really worth it in terms of performance. The code for this is up as a part of DigitallyCreated Utilities (it's in trunk and not part of an official release at the time of writing), so if you're interested in seeing it in action go check it out there.

Keep Australia Free of Internet Censorship!

December 14, 2009 2:00 PM by Daniel Chambers

Today, Senator Conroy, the Australian Minister for Broadband, Communications and the Digital Economy, announced that he will introduce legislation requiring a mandatory Internet censorship filter to be installed at the ISP level. He also released the study on which he based his decision (the Enex Testlab Internet Service Provider Content Filtering Pilot Report).

However, the results in the study clearly show the filter is unworkable and cannot solve the problem of people accessing illegal and unwanted material online. Disregarding this, Senator Conroy is blithely pressing forward with getting it implemented.

As this fiasco has unfolded this year, I have sat back, signed two petitions, and assumed that when the results of the trials the government were performing on the feasibility of this filter came back, they would see that it is stupid and impossible and would drop the idea.

Now that the results are back in the form of the study report, Conroy is flying in the face of logic and implementing the filter anyway.

So enough is enough. I can't sit on my hands any more and watch Australia go to hell in a handbasket. I want my voice heard. I decided to write to Senator Conroy and my local Member of Parliament personally, to declare my complete contempt for what they are trying to do. I didn't want to re-use one of the canned letters that various sources provide, since I thought that would diminish the value of the message I am trying to communicate.

Below is my letter to Senator Conroy, which I will mail to him in hard copy. I ask that you do not copy it verbatim and send it as your letter. However, you are more than welcome to use my sources and facts and reword its message as your own letter. In fact, I encourage you to do so. Note that in my letter I referred to a lot of sources and below these are marked with (link) for hyperlinks and (hover) for textual sources (hover over it with the mouse for the description), but in the actual printed letter they are done as footnotes. I wanted to source as much stuff as I could, so it didn't seem like I was just asserting stuff.

Dear Minister,

I was very much disturbed to find today that you have decided to go ahead with your mandatory Internet filtering initiative.

Although you declared that the ISP-based filter system is “100% accurate” (source), a look into the report that you commissioned shows that, in fact, 3% of legitimate websites will be erroneously censored (hover). This seems like a small percentage until you apply it to the total number of sites on the internet (233 million (source)); when you look at it like this, your filter scheme will incorrectly censor 7 million sites.

The same report states that 20% of inappropriate content will be let through the filter unblocked (hover). This shows that it is technically infeasible to censor the internet effectively and completely, which means that no matter what you do, the filter cannot supplant proper parental supervision and therefore the children that you are worried about are still at risk. If the idea is to protect Australian adults from accessing inappropriate materials, the same argument still applies.

Australian households are diverse; many of them do not have young children, so mandating a one-size-fits-all filter will not serve the public well. In addition, I do not believe that it is the Government’s role to parent my children for me, especially considering your approach will not work 100% and I must do what I would have had to do before: supervise my children on the Internet.

Even if families wish to protect their children from unsuitable content (a noble cause indeed), much more cost-effective solutions already exist: for example, the Howard Government’s free home computer filtering software. A major argument against this technique is that skilled children can work around the software; however, as the Enex report indicates, this is still possible with the ISP-level filter, rendering it as flawed as home filters, but much more expensive.

If the filter’s purpose is, in fact, to deny access to illegal material that the tiny minority of deviant Australians want to look at (such as child pornography), the report also says that for every single filtering strategy there is a way around it (hover), rendering the filter useless at blocking anyone who genuinely wants to access the illegal material. The money spent on this scheme would be better spent funding the police so they can catch these deviants.

The report also declares that 80% of users surveyed said the filtering “either entirely or generally met their needs”. On the face of it, this may seem like a positive response in favour of the filtering system. However, I submit that this result is likely skewed in favour of filtering, as the survey was only completed by those who opted in to the filtering in the first place. Those who disapprove of the filtering (such as myself) are unlikely to have voluntarily signed themselves up for it and therefore are not represented in this survey.

The opponents of your filtering program are many: ISPs have protested against it (link), child protection groups such as “Save the Children” have cried out against it (link), and many, many Australian citizens disagree with it, as is evidenced by the many petitions signed by Australian citizens (link & link), and the storm on Twitter today protesting the filter that occurred after you announced it (link) (remember that Twitter was used to get the message out about the Iranian elections (link), which highlights its importance in sourcing people’s opinions).

Your policy towards the transparency of the ACMA blacklist also disturbs me. You have indicated that you will not publicly display the contents of the ACMA blacklist, which is a worrying lack of transparency. In a democracy, the Government governs on behalf of the people and a cornerstone of this is accountability. How can the public hold the Government accountable for the contents of the blacklist if it is not in the public domain? I know you assure us that it will not be misused, but with all due respect, you will not be in Government forever. We need accountability mechanisms, if not for you, then for future Governments.

In conclusion, I strongly believe that your proposed ISP-based filter system is a functionally ineffective, unwanted and cost-inefficient solution to the problem of inappropriate content on the Internet. The fact is that if it is used to protect children (or adults), it will not, since it is only 80% accurate and cannot replace parental supervision, which is 100% accurate. Also, if it is used to stop deviant adults accessing illegal material, it will not, since it can be worked around. The only way to protect children fully is through parental supervision (perhaps augmented by optional local computer filtering software), and the only way to stop deviants is to fund the police so they are able to do so.

I ask you, as you are an Australian leader who leads on my behalf, to please take my arguments into consideration and reassess your ISP-level filtering scheme.

Yours sincerely,

Daniel Chambers

I reworded it slightly for the version I will send to my local MP, Anna Burke, but that version is essentially the same.

I hope you agree with the points I have put across in my letter. I strongly encourage you to write a letter to your own MP and to Senator Conroy and let them know that you disapprove of the filtering scheme. At the very least, use a canned letter and send that, but I think what you say would be given more weight if you wrote your own.

Remember, what is decided next year when Conroy's law goes into Parliament will be binding. If it gets through, you will be censored. This is not a bad dream, and it will not go away if you ignore it quietly and trust others to get your message across for you. I will leave you with this very appropriate quote:

"The only thing necessary for the triumph of evil is for good men to do nothing"

-- Edmund Burke

ASP.NET MVC Model Binders + RegExs + LINQ == Awesome

December 10, 2009 2:00 PM by Daniel Chambers

I was working on some ASP.NET MVC code today and I created this neat little solution that uses a custom model binder to automatically read in a bunch of dynamically created form fields and project their data into a set of business entities which were returned by the model binder as a parameter to an action method. The model binder isn't particularly complex, but the way I used regular expressions and LINQ to identify and collate the fields I needed to create the list of entities from was really neat and cool.

I wrote a Javascript-powered form (using jQuery) that allowed the user to add and edit multiple items at the same time, and then submit the set of items in a batch to the server for a save. The entity these items represented looks like this (simplified):

```csharp
public class CostRangeItem
{
    public short VolumeUpperBound { get; set; }
    public decimal Cost { get; set; }
}
```

The Javascript code, which I won't go into here as it's not particularly cool (just a bit of jQuery magic), creates form items that look like this:

```html
<input id="VolumeUpperBound:0" name="VolumeUpperBound:0" type="text" />
<input id="Cost:0" name="Cost:0" type="text" />
```

The multiple items are handled by adding more form fields and incrementing the number after the ":" in the id/name. So having fields for two items means you end up with this: