DigitallyCreated Utilities Now Open Source

August 05, 2009 2:00 PM by Daniel Chambers

My part time job for 2009 (while I study at university) has been working at a small company called Onset doing .NET development work. Among other things, I am (with a friend who also works there) re-writing their website in ASP.NET MVC. As I wrote code for their website I kept copy/pasting in code from previous projects and thinking about ways I could make development in ASP.NET MVC and Entity Framework even better.

I decided there needed to be a better way of keeping all this utility code that I kept importing from my personal projects into Onset's code base in one place. I also wanted a place that I could add further utilities as I wrote them and use them across all my projects, personal or commercial. So I took my utility code from my personal projects, implemented those cool ideas I had (on my time, not on Onset's!) and created the DigitallyCreated Utilities open source project on CodePlex. I've put the code out there under the Ms-PL licence, which basically lets you do anything with it.

The project is pretty small at the moment and only contains a handful of classes. However, it does contain some really cool functionality! The main feature at the moment is making it really really easy to do paging and sorting of data in ASP.NET MVC and Entity Framework. MVC doesn't come with any fancy controls, so you need to implement all the UI code for paging and sorting functionality yourself. I foresaw this becoming repetitive in my work on the Onset website, so I wrote a bunch of stuff that makes it ridiculously easy to do.

This is the part where I'd normally jump into some awesome code examples, but I already spent a chunk of time writing up a tutorial on the CodePlex wiki (which is really good by the way, and open source!), so go over there and check it out.

I'll be continuing to add to the project over time, so I thought I might need some "project values" to illustrate the quality level that I want the project to be at:

- To provide useful utilities and extensions to basic .NET functionality that saves developers' time

- To provide fully XML-documented source code (nothing is more annoying than undocumented code)

- To back up the source code documentation with useful tutorial articles that help developers use this project

That's not just guff: all the source code is fully documented and that tutorial I wrote is already up there. I hate open source projects that are badly documented; they have so much potential, but learning how they work and how to use them is always a pain in the arse. So I'm striving to not be like that.

The first release (v0.1.0) is already up there. I even learned how to use Sandcastle and Sandcastle Help File Builder to create a CHM copy of the API documentation for the release. So you can now view the XML documentation in its full MSDN-style glory when you download the release. The assemblies are accompanied by their XMLdoc files, so you get the documentation in Visual Studio as well.

Setting all this up took a bit of time, but I'm really happy with the result. I'm looking forward to adding more stuff to the project over time, although I might not have a lot of time to do so in the near future since uni is starting up again shortly. Hopefully you find what it has got as useful as I have.

WCF Authentication at both Transport and Message Level

June 10, 2009 2:00 PM by Daniel Chambers

Let's say you've got a web service that exposes data (we could hardly say that you didn't! :D). This particular data always relates to a particular user; for example, financial transactions are always viewed from the perspective of the "user". If it's me, it's my transactions. If it's you, it's your transactions. This means every call to the web service needs to identify who the user is (or you can use a session, but let's put that idea aside for now).

However, let's also say that these calls are "on behalf" of the user and not from them directly. So we need a way of authenticating who is putting the call through on behalf of the user.

This scenario can occur in a user-centric web application, where the web application runs on its own server and talks to a business service on a separate server. The web application server talks on behalf of the user with the business server.

So wouldn't it be nice if we could do the following with the WCF service that enables the web application server to talk to the business server: authenticate the user at the message level using their username and password and authenticate the web application server at the transport level by checking its certificate to ensure that it is an expected and trusted server?

Currently in WCF the out-of-the-box bindings net.tcp and WSHttp offer authentication at either message level or the transport level, but not both. TransportWithMessageCredential security is exactly that: transport security (encryption) with credentials at the message level. So how can we authenticate at both the transport and message level then?

The answer: create a custom binding (note: I will focus on using the net.tcp binding as a base here). I found the easiest way to do this is to continue doing your configuration in the XML, but at runtime copy and slightly modify the netTcp binding from the XML configuration. There is literally one switch you need to enable. Here's the code on the service side:

```csharp
ServiceHost businessHost = new ServiceHost(typeof(DHTestBusinessService));

ServiceEndpoint endpoint = businessHost.Description.Endpoints[0];

BindingElementCollection bindingElements = endpoint.Binding.CreateBindingElements();

SslStreamSecurityBindingElement sslElement = bindingElements.Find<SslStreamSecurityBindingElement>();

//Turn on client certificate validation
sslElement.RequireClientCertificate = true;

CustomBinding newBinding = new CustomBinding(bindingElements);
NetTcpBinding oldBinding = (NetTcpBinding)endpoint.Binding;
newBinding.Namespace = oldBinding.Namespace;
endpoint.Binding = newBinding;
```

Note that you need to run this code before you Open() on your ServiceHost.

You do exactly the same thing on the client side, except you get the ServiceEndpoint in a slightly different manner:

```csharp
DHTestBusinessServiceClient client = new DHTestBusinessServiceClient();

ServiceEndpoint endpoint = client.Endpoint;

//Same code as the service goes here
```

You'd think that'd be it, but you'd be wrong. :) This is where it gets extra lame. You're probably attributing your concrete service methods with PrincipalPermission to restrict access based on the roles of the service user, like this:

```csharp
[PrincipalPermission(SecurityAction.Demand, Role = "MyRole")]
```

This technique will start failing once you apply the above changes. The reason is that the user's PrimaryIdentity (which you get from OperationContext.Current.ServiceSecurityContext.PrimaryIdentity) will end up being an unknown, username-less, unauthenticated IIdentity. This is because there are actually two identities representing the user: one for the X509 certificate used to authenticate over Transport, and one for the username and password credentials used to authenticate at Message level. When I reverse engineered the WCF binaries to see why it wasn't giving me the PrimaryIdentity, I found that it has an explicit line of code that causes it to return that empty IIdentity if it finds more than one IIdentity. I guess it's because it's got no way to figure out which one is the primary one.

This means using the PrincipalPermission attribute is out the window. Instead, I wrote a method to mimic its functionality that can deal with multiple IIdentities:

```csharp
private void AssertPermissions(IEnumerable<string> rolesDemanded)
{
    IList<IIdentity> identities = OperationContext.Current.ServiceSecurityContext.AuthorizationContext.Properties["Identities"] as IList<IIdentity>;

    if (identities == null)
        throw new SecurityException("Unauthenticated access. No identities provided.");

    foreach (IIdentity identity in identities)
    {
        if (identity.IsAuthenticated == false)
            throw new SecurityException("Unauthenticated identity: " + identity.Name);
    }

    IIdentity usernameIdentity = identities.Where(id => id.GetType().Equals(typeof(GenericIdentity)))
                                           .SingleOrDefault();

    //SingleOrDefault returns null when no username identity is present, so guard against it
    if (usernameIdentity == null)
        throw new SecurityException("Unauthenticated access. No username identity provided.");

    string[] userRoles = Roles.GetRolesForUser(usernameIdentity.Name);

    foreach (string demandedRole in rolesDemanded)
    {
        if (userRoles.Contains(demandedRole) == false)
            throw new SecurityException("Access denied: authorisation failure.");
    }
}
```

It's not pretty (especially the way I detect the username/password credential IIdentity), but it works! Now, at the top of your service methods you need to call it like this:

```csharp
AssertPermissions(new [] {"MyRole"});
```
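If you want to poke at the identity-picking logic outside of a running WCF service, here is a minimal sketch of just that part. It assumes, as the method above does, that the username/password identity is the only one whose exact type is GenericIdentity. The names IdentityChecks, FindUsernameIdentity, HasAllRoles and FakeCertificateIdentity are made up for this illustration and are not part of WCF.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security;
using System.Security.Principal;

// Hypothetical stand-in for the X509 identity, only so the sketch can run outside WCF.
public sealed class FakeCertificateIdentity : IIdentity
{
    public FakeCertificateIdentity(string name) { Name = name; }
    public string Name { get; private set; }
    public string AuthenticationType { get { return "X509"; } }
    public bool IsAuthenticated { get { return true; } }
}

public static class IdentityChecks
{
    // Returns the username/password identity, or throws if the caller is not fully authenticated.
    // Assumption: it is the only identity whose exact type is GenericIdentity.
    public static IIdentity FindUsernameIdentity(IEnumerable<IIdentity> identities)
    {
        if (identities == null)
            throw new SecurityException("Unauthenticated access. No identities provided.");

        List<IIdentity> all = identities.ToList();

        if (all.Any(id => !id.IsAuthenticated))
            throw new SecurityException("Unauthenticated identity found.");

        // SingleOrDefault returns null for no match and throws if there is more than one match.
        IIdentity usernameIdentity = all.SingleOrDefault(id => id.GetType() == typeof(GenericIdentity));

        if (usernameIdentity == null)
            throw new SecurityException("No username identity provided.");

        return usernameIdentity;
    }

    // True only when every demanded role is among the roles the user holds.
    public static bool HasAllRoles(IEnumerable<string> userRoles, IEnumerable<string> rolesDemanded)
    {
        HashSet<string> held = new HashSet<string>(userRoles);
        return rolesDemanded.All(role => held.Contains(role));
    }
}
```

Keeping these two checks free of OperationContext and Roles means they can be unit tested with plain in-memory identities.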

Ensure that your client is providing a client certificate to the server by setting the client certificate element in your XML config under an endpoint behaviour's client credentials section:

```xml
<clientCertificate storeLocation="LocalMachine"
                   storeName="My"
                   x509FindType="FindBySubjectDistinguishedName"
                   findValue="CN=mycertficatename"/>
```

Now, I mentioned earlier that the business web service should be authenticating the web application server clients using these provided certificates. You could use chain trust (see the Chain Trust and Certificate Authorities section of this page for more information) to accept any client that was signed by any of the default root authorities, but this doesn't really provide exact authentication as to who is allowed to connect. This is because any server that has a certificate that is signed by any trusted authority will authenticate fine! What you need is to create your own certificate authority and issue your own certificates to your clients (I covered this process in a previous blog titled "Using Makecert to Create Certificates for Development").

However, to get WCF to only accept clients signed by a specific authority you need to write your own certificate validator to plug into the WCF service. You do this by inheriting from the X509CertificateValidator class like this:

```csharp
public class DHCertificateValidator
    : X509CertificateValidator
{
    private static readonly X509CertificateValidator ChainTrustValidator;
    private const X509RevocationMode ChainTrustRevocationMode = X509RevocationMode.NoCheck;
    private const StoreLocation AuthorityCertStoreLocation = StoreLocation.LocalMachine;
    private const StoreName AuthorityCertStoreName = StoreName.Root;
    private const string AuthorityCertThumbprint = "e12205f07ce5b101f0ae8f1da76716e545951b22";

    static DHCertificateValidator()
    {
        X509ChainPolicy policy = new X509ChainPolicy();
        policy.RevocationMode = ChainTrustRevocationMode;
        ChainTrustValidator = CreateChainTrustValidator(true, policy);
    }

    public override void Validate(X509Certificate2 certificate)
    {
        ChainTrustValidator.Validate(certificate);

        X509Store store = new X509Store(AuthorityCertStoreName, AuthorityCertStoreLocation);

        store.Open(OpenFlags.ReadOnly);
        try
        {
            X509Certificate2Collection certs = store.Certificates.Find(X509FindType.FindBySubjectDistinguishedName, certificate.IssuerName.Name, true);

            if (certs.Count != 1)
                throw new SecurityTokenValidationException("Cannot find the root authority certificate");

            X509Certificate2 rootAuthorityCert = certs[0];
            if (String.Compare(rootAuthorityCert.Thumbprint, AuthorityCertThumbprint, true) != 0)
                throw new SecurityTokenValidationException("Not signed by our certificate authority");
        }
        finally
        {
            //Close the store even when validation fails with an exception
            store.Close();
        }
    }
}
```

As you can see, the class re-uses the WCF chain trust mechanism to ensure that the certificate still passes chain trust requirements. But it then goes a step further and looks up the issuing certificate in the computer's certificate repository to ensure that it is the correct authority. The "correct" authority is defined by the certificate thumbprint defined as a constant at the top of the class. You can get this from any certificate by inspecting its properties. (As an improvement to this class, it might be beneficial for you to replace the constants at the top of the class with configurable options dragged from a configuration file).
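One thing to watch if you copy the thumbprint out of a certificate's properties: the value you paste may contain spaces or other stray characters, and its letter case may differ from what X509Certificate2.Thumbprint returns. Here's a small sketch of a more forgiving comparison. ThumbprintUtil, Normalise and Matches are hypothetical names for this illustration, not part of the validator above or of the .NET Framework.

```csharp
using System;
using System.Linq;

public static class ThumbprintUtil
{
    // Strips everything that is not a hex digit (spaces, invisible characters) and upper-cases the rest.
    public static string Normalise(string thumbprint)
    {
        if (thumbprint == null)
            throw new ArgumentNullException("thumbprint");

        char[] hexDigits = thumbprint.Where(c => Uri.IsHexDigit(c)).ToArray();
        return new string(hexDigits).ToUpperInvariant();
    }

    // Compares two thumbprints after normalising both sides.
    public static bool Matches(string actual, string expected)
    {
        return String.Equals(Normalise(actual), Normalise(expected), StringComparison.Ordinal);
    }
}
```

With something like this, the thumbprint check in Validate could become `ThumbprintUtil.Matches(rootAuthorityCert.Thumbprint, AuthorityCertThumbprint)`, which would also tolerate a thumbprint pasted into a configuration file with its spaces intact.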

To configure the WCF service to use this validator you need to set some settings on the authentication element in your XML config under the service behaviour's service credential's client certificate section, like this:

```xml
<authentication certificateValidationMode="Custom"
                customCertificateValidatorType="DigitallyCreated.DH.Business.DHCertificateValidator, MyAssemblyName"
                trustedStoreLocation="LocalMachine"
                revocationMode="NoCheck" />
```

And that's it! Now you are authenticating at transport level as well as at message level using certificates and usernames and passwords!

This post was derived from my question at StackOverflow and the answer that I researched up and posted (to answer my own question). See the StackOverflow question here.

Programming to an Interface when using a WCF Service Client

June 09, 2009 3:00 PM by Daniel Chambers

Programming to an interface and not an implementation is one of the big object-oriented design principles. So, when working with a web service client auto-generated by WCF, you will want to program to the service interface rather than the client class. However, as it currently stands this is made a little awkward.

Why? Because the WCF service client class is IDisposable and therefore needs to be explicitly disposed after you've finished using it. The easiest way to do this in C# is to use a using block. A using block takes an IDisposable, and when the block is exited, either by exiting the block normally, or if you quit it early by throwing an exception or something similar, it will automatically call Dispose() on your IDisposable.
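To make that concrete, here is a small sketch showing that a using block disposes its resource on both the normal and the exceptional path, next to roughly the try/finally that it stands for. TrackedResource and UsingDemo are hypothetical names invented for this illustration.

```csharp
using System;

// Hypothetical resource that simply records whether Dispose() was called.
public sealed class TrackedResource : IDisposable
{
    public bool IsDisposed { get; private set; }
    public void Dispose() { IsDisposed = true; }
}

public static class UsingDemo
{
    // Uses the resource inside a using block, optionally throwing part-way through.
    public static TrackedResource UseWithUsingBlock(bool throwInside)
    {
        TrackedResource resource = new TrackedResource();
        try
        {
            using (resource)
            {
                if (throwInside)
                    throw new InvalidOperationException("Something went wrong");
            }
        }
        catch (InvalidOperationException)
        {
            // Swallowed only so the caller can inspect the resource afterwards.
        }
        return resource;
    }

    // Roughly what the using block above is shorthand for.
    public static TrackedResource UseWithTryFinally(bool throwInside)
    {
        TrackedResource resource = new TrackedResource();
        try
        {
            try
            {
                if (throwInside)
                    throw new InvalidOperationException("Something went wrong");
            }
            finally
            {
                if (resource != null)
                    ((IDisposable)resource).Dispose();
            }
        }
        catch (InvalidOperationException)
        {
            // Swallowed only so the caller can inspect the resource afterwards.
        }
        return resource;
    }
}
```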

The problem lies in the fact that, although the WCF service client implements IDisposable, the service interface does not. This means you either have to cast to the concrete client type and call Dispose() or you have to manually check to see whether the service-interfaced object you possess is IDisposable and if it is then Dispose() it. That is obviously a clunky and error-prone way of doing it.

Here's the way I solved the problem. It's not totally pretty, but it's a lot better than doing the above hacks. Firstly, because you are programming to an abstract interface, you need to have a factory class that creates your concrete objects. This factory returns the concrete client object wrapped in a DisposableWrapper. DisposableWrapper is a generic class I created that aids in abstracting away whether or not the concrete class is IDisposable or not. Here's the code:

```csharp
public class DisposableWrapper<T> : IDisposable
{
    private readonly T _Object;

    public DisposableWrapper(T objectToWrap)
    {
        _Object = objectToWrap;
    }

    public T Object
    {
        get { return _Object; }
    }

    public void Dispose()
    {
        IDisposable disposable = _Object as IDisposable;
        if (disposable != null)
            disposable.Dispose();
    }
}
```

The service client factory method that creates the service looks like this:

```csharp
public static DisposableWrapper<IAuthAndAuthService> CreateAuthAndAuthService()
{
    return new DisposableWrapper<IAuthAndAuthService>(new AuthAndAuthServiceClient());
}
```

Then, when you use the factory method, you do this:

```csharp
using (DisposableWrapper<IAuthAndAuthService> clientWrapper = ServiceClientFactory.CreateAuthAndAuthService())
{
    IAuthAndAuthService client = clientWrapper.Object;

    //Do your work with the client here
}
```

As you can see, the factory method returns the concrete service client wrapped in a DisposableWrapper, but exposed as the interface, because it uses the interface type as the generic type argument for DisposableWrapper. You call the factory method and use its resulting DisposableWrapper inside your using block. The DisposableWrapper ensures that the object it wraps, if it is indeed IDisposable, is disposed when it itself is disposed by the using block. You can get the client object out of the wrapper, but as the service interface type and not the concrete type, which ensures you are programming to the interface and not the implementation.

All in all, it's a fairly neat way to program against a service interface, while still retaining the ability to Dispose() the service client object.
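If you'd like to try the wrapper without a real WCF client, the sketch below runs it against two stand-in implementations, one disposable and one not. The DisposableWrapper class is repeated so the example compiles on its own; IGreetingService, GreetingServiceClient, InMemoryGreetingService and WrapperDemo are all hypothetical names made up for this illustration.

```csharp
using System;

public class DisposableWrapper<T> : IDisposable
{
    private readonly T _Object;

    public DisposableWrapper(T objectToWrap) { _Object = objectToWrap; }

    public T Object { get { return _Object; } }

    public void Dispose()
    {
        // Only dispose the wrapped object if it actually supports it.
        IDisposable disposable = _Object as IDisposable;
        if (disposable != null)
            disposable.Dispose();
    }
}

// Hypothetical service interface; note that it does not extend IDisposable.
public interface IGreetingService
{
    string Greet(string name);
}

// Stand-in for a generated WCF client: implements the interface and IDisposable.
public sealed class GreetingServiceClient : IGreetingService, IDisposable
{
    public bool IsDisposed { get; private set; }
    public string Greet(string name) { return "Hello, " + name; }
    public void Dispose() { IsDisposed = true; }
}

// Stand-in for an implementation with nothing to dispose (a test double, for example).
public sealed class InMemoryGreetingService : IGreetingService
{
    public string Greet(string name) { return "Hello, " + name; }
}

public static class WrapperDemo
{
    // The calling code only ever sees IGreetingService, yet disposal still happens when needed.
    public static string GreetThroughWrapper(IGreetingService service)
    {
        using (DisposableWrapper<IGreetingService> wrapper = new DisposableWrapper<IGreetingService>(service))
        {
            return wrapper.Object.Greet("world");
        }
    }
}
```

The same calling code works for both implementations, which is handy when you swap the real client for a test double.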

Dynamic Queries in Entity Framework using Expression Trees

June 06, 2009 2:00 PM by Daniel Chambers (last modified on January 13, 2011 12:24 PM)

Most of the queries you do in your application are probably static queries. The parameters you set on the query probably change, but the actual query itself doesn't. That's why compiled queries are so cool, because you can pre-compile and reuse a query over and over again and just vary the parameters (see my last blog for more information).

But sometimes you might need to construct a query at runtime. By this I mean not just changing the parameter values, but actually changing the query structure. A good example of this would be a filter, where, depending on what the user wants, you dynamically create a query that culls a set down to what the user is looking for. If you've only got a couple of filter options, you can probably get away with writing multiple compiled queries to cover the permutations, but it only takes a few filter options before you've got a lot of permutations and it becomes unmanageable.

A good example of this is file searching. You can filter a list of files by name, type, size, date modified, etc. The user may only want to filter by one of these filters, for example with "Awesome" as the filename. But the user may also want to filter by multiple filters, for example, "Awesome" as the filename, but modified after 2009/07/07 and more than 20MB in size. To create a static query for each combination of those four filters would result in 16 queries (2 to the power of 4, since each filter is either on or off)!
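For filters that are simply ANDed together like these, one common way to avoid writing a query per combination is to chain Where calls onto an IQueryable only for the filters the user actually set. Here's a minimal sketch of that idea running against an in-memory list. FileEntry, FileFilter and FileSearch are hypothetical types invented for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical file record.
public class FileEntry
{
    public string Name { get; set; }
    public long SizeBytes { get; set; }
    public DateTime Modified { get; set; }
}

// Hypothetical search criteria; a null value means "don't filter on this".
public class FileFilter
{
    public string NameContains { get; set; }
    public long? MinSizeBytes { get; set; }
    public DateTime? ModifiedAfter { get; set; }
}

public static class FileSearch
{
    // Adds one Where call per filter that is set; successive Where calls are ANDed together.
    public static IQueryable<FileEntry> Apply(IQueryable<FileEntry> files, FileFilter filter)
    {
        if (!String.IsNullOrEmpty(filter.NameContains))
        {
            string name = filter.NameContains;
            files = files.Where(f => f.Name.Contains(name));
        }

        if (filter.MinSizeBytes.HasValue)
        {
            long minSize = filter.MinSizeBytes.Value;
            files = files.Where(f => f.SizeBytes > minSize);
        }

        if (filter.ModifiedAfter.HasValue)
        {
            DateTime after = filter.ModifiedAfter.Value;
            files = files.Where(f => f.Modified > after);
        }

        return files;
    }
}
```

This only covers AND-style combinations. Once you need ORs built from a variable number of values, you have to construct the expression tree yourself.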

My first foray into creating dynamic queries is a bit less ambitious than the above example, however. I have a scenario where I need to pull out a number of Tag objects from the database by their IDs. However, the number of the Tag objects needed is determined by the user. They may select 3 Tags, or they may select 6 Tags, or they may select 4 tags; it's up to them.

The most boring approach is, of course, to get each Tag out of the database individually with its own query (the "get each Tag individually" approach):

```csharp
IList<Tag> list = new List<Tag>();
foreach (int tagId in WantedTagIds)
{
    int localTagId = tagId;

    Tag theTag = (from tag in context.Tag
                  where
                      tag.Account.ID == AccountId &&
                      tag.ID == localTagId
                  select tag).First();

    list.Add(theTag);
}
```

You could compile that query to make it run faster, but it's still a slow operation. If the user wants to get 6 Tags, you need to query the database 6 times. Not very cool.

This is where dynamic queries can step in. If the user asks for 3 Tags, you can generate a where clause that gets all three Tags in one go; essentially: tag.ID == 10 || tag.ID == 12 || tag.ID == 14. That way you get all three Tags in one query to the database. So, I wrote some generic type-safe code to perform exactly that: generating a where clause expression from a list of IDs so that a Tag with any of those IDs is retrieved.

To understand how I did this, you need to understand how the where clause in a LINQ expression works. It is easiest to understand if you look at the method-chain form of LINQ rather than the special C# syntax. It looks like this:

```csharp
IQueryable<Tag> tags = context.Tag.AsQueryable()
                              .Where(tag => tag.ID == 10);
```

The Where method takes a parameter that looks like this: Expression<Func<Tag, bool>>. Notice how the Func delegate is wrapped in an Expression? This means that instead of creating an actual anonymous method for the Func delegate, the compiler will instead convert your lambda expression into an Expression Tree.

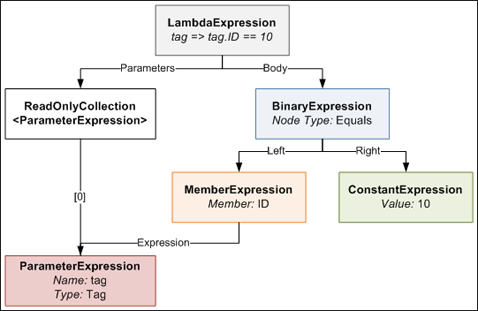

An Expression Tree is a representation of your expression in an object tree. Here is an object tree that shows the main objects in the expression tree generated by the compiler for the lambda expression in the above example's Where method:

The LambdaExpression has a collection of ParameterExpressions, which are the parameters on the left side of the => symbol in the code. The actual Body of the lambda is made up of a BinaryExpression of type Equals, whose Right side is a ConstantExpression that contains the value of 10, and whose Left side is a MemberExpression. A MemberExpression represents the access of the ID property on the tag parameter.
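To see those objects in code, here is a sketch that builds the same tree by hand with the System.Linq.Expressions factory methods instead of letting the compiler do it. The Tag class here is a plain stand-in for the entity class, and ExpressionTreeDemo and BuildIdEquals are names made up for this illustration.

```csharp
using System;
using System.Linq.Expressions;

// Plain stand-in for the Tag entity, so the sketch runs without a database.
public class Tag
{
    public int ID { get; set; }
}

public static class ExpressionTreeDemo
{
    // Builds the equivalent of: tag => tag.ID == id
    public static Expression<Func<Tag, bool>> BuildIdEquals(int id)
    {
        // The parameter on the left of the => symbol
        ParameterExpression tagParam = Expression.Parameter(typeof(Tag), "tag");

        // Left side of the comparison: access of the ID property on the tag parameter
        MemberExpression idProperty = Expression.Property(tagParam, "ID");

        // Right side of the comparison: the constant value
        ConstantExpression wantedId = Expression.Constant(id, typeof(int));

        // The body of the lambda: a BinaryExpression whose node type is Equal
        BinaryExpression body = Expression.Equal(idProperty, wantedId);

        return Expression.Lambda<Func<Tag, bool>>(body, tagParam);
    }
}
```

Calling Compile() on the result gives you an ordinary delegate, which is a convenient way to check a hand-built tree against in-memory objects before handing it to a query provider.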

So if we wanted to represent a more complex expression such as:

```csharp
tag => tag.ID == 10 || tag.ID == 12 || tag.ID == 14
```

this is what the expression tree would look like. It looks a bit daunting, but computers are very good at trees, so writing code to generate such a tree is not too difficult with the help of a little recursion.

I defined a special utility method that allows you to create an expression tree like the one above that results in a Where expression that accepts a particular tag so long as its ID is in a certain set of IDs. The method is generic and reusable across anywhere where you need a Where filter that gets "this value, or this value, or this value... etc". The public method looks like this:

```csharp
public static Expression<Func<TValue, bool>> BuildOrExpressionTree<TValue, TCompareAgainst>(
    IEnumerable<TCompareAgainst> wantedItems,
    Expression<Func<TValue, TCompareAgainst>> convertBetweenTypes)
{
    ParameterExpression inputParam = convertBetweenTypes.Parameters[0];

    Expression binaryExpressionTree = BuildBinaryOrTree(wantedItems.GetEnumerator(), convertBetweenTypes.Body, null);

    return Expression.Lambda<Func<TValue, bool>>(binaryExpressionTree, new[] { inputParam });
}
```

It's stuffed full of generics which makes it look more complicated than it really is. Here's how you call it:

```csharp
List<int> wantedTagIds = new List<int> { 10, 12, 14 };
Expression<Func<Tag, bool>> whereClause = BuildOrExpressionTree<Tag, int>(wantedTagIds, tag => tag.ID);
```

As I explain how it works, I suggest you keep an eye on the last expression tree diagram. The method defines two generic types, one called TValue which represents the value you are comparing, in this case the Tag class. The other generic type is called TCompareAgainst and is the type of the value you are comparing against, in this case int (because the Tag.ID property is an int).

You pass the method an IEnumerable<TCompareAgainst>, which in our case is an IEnumerable<int>, because we have a list of IDs we are comparing against.

The second parameter ("convertBetweenTypes") can be a bit confusing; let me explain. The expression we are defining for the Where clause takes a Tag and returns a bool (hence the Func<Tag, bool> typed expression). Since the set of values we are comparing against are ints, we can't just do an == between the Tag and an int. To be able to do this comparison, we need to somehow "convert" the Tag we receive into an int for comparison. This is where the second parameter comes in. It defines an Expression that takes a Tag and returns an int (or in generic terms takes a TValue and returns a TCompareAgainst). When you write tag => tag.ID, the compiler generates an Expression Tree that contains a MemberExpression that accesses ID on the tag ParameterExpression. This means wherever we need to do a Tag == int, we instead do a Tag.ID == int by substituting the Tag.ID MemberExpression generated in the place of the Tag. Here's a diagram that explains what I'm ranting about.

The main purpose of this method is to create the final LambdaExpression that the method returns. It does this by attaching the expression tree built by the BuildBinaryOrTree method (we'll get into this in a second) and the ParameterExpression from the convertBetweenTypes to the final LambdaExpression object.

The BuildBinaryOrTree method looks like this:

```csharp
private static Expression BuildBinaryOrTree<T>(
    IEnumerator<T> itemEnumerator,
    Expression expressionToCompareTo,
    Expression expression)
{
    if (itemEnumerator.MoveNext() == false)
        return expression;

    ConstantExpression constant = Expression.Constant(itemEnumerator.Current, typeof(T));
    BinaryExpression comparison = Expression.Equal(expressionToCompareTo, constant);

    BinaryExpression newExpression;

    if (expression == null)
        newExpression = comparison;
    else
        newExpression = Expression.OrElse(expression, comparison);

    return BuildBinaryOrTree(itemEnumerator, expressionToCompareTo, newExpression);
}
```

It takes an IEnumerator that enumerates over the wantedItems list (from the BuildOrExpressionTree method), an expression to compare each of these wanted items to (which is the compiler-generated MemberExpression from the BuildOrExpressionTree method), and an expression from a previous recursion (starts off as null).

The method creates an Equals BinaryExpression that compares the expressionToCompareTo and the current itemEnumerator value. It then joins this in an OrElse BinaryExpression comparison with the expression from previous recursions. It then takes this new expression and passes it down to the next recursive call. This process continues until itemEnumerator is exhausted at which point the final expression tree is returned.

Once this returned expression tree is placed in its LambdaExpression by the BuildOrExpressionTree method, you end up with a pretty expression tree like the one shown previously. We can then use this expression tree in the where clause of a LINQ method chain query.
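The same left-leaning chain of OrElse nodes can also be built without recursion. The sketch below is an alternative, not the code from this post: it folds the comparisons together with LINQ's Aggregate and falls back to a constant false body when the list of wanted items is empty (the recursive version ends up with a null body in that case). OrExpressionSketch and BuildOrExpression are hypothetical names, Tag is a plain stand-in class, and I've only exercised this against in-memory objects; whether a given query provider translates the constant false body is an assumption you would need to check.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;

// Plain stand-in for the Tag entity, so the sketch runs without a database.
public class Tag
{
    public int ID { get; set; }
}

public static class OrExpressionSketch
{
    // Builds: x => selector(x) == item1 || selector(x) == item2 || ...
    public static Expression<Func<TValue, bool>> BuildOrExpression<TValue, TCompareAgainst>(
        IEnumerable<TCompareAgainst> wantedItems,
        Expression<Func<TValue, TCompareAgainst>> convertBetweenTypes)
    {
        Expression body = wantedItems
            // One Equal comparison per wanted item, all reusing the selector's body
            .Select(item => (Expression)Expression.Equal(
                convertBetweenTypes.Body,
                Expression.Constant(item, typeof(TCompareAgainst))))
            // No wanted items: match nothing rather than producing a null body
            .DefaultIfEmpty((Expression)Expression.Constant(false))
            // Fold left to right: ((a || b) || c), the same shape the recursion produces
            .Aggregate((left, right) => Expression.OrElse(left, right));

        // Reuse the selector's own parameter so the body and the lambda agree on it
        return Expression.Lambda<Func<TValue, bool>>(body, convertBetweenTypes.Parameters);
    }
}
```

It is called the same way as the recursive version, for example `OrExpressionSketch.BuildOrExpression<Tag, int>(wantedTagIds, tag => tag.ID)`.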

Here's the final "generated where clause" query in action:

```csharp
using (DHEntities context = new DHEntities())
{
    int[] wantedTagIds = new[] {12, 24, 1, 4, 32, 19};

    Expression<Func<Tag, bool>> whereClause = ExpressionTreeUtil.BuildOrExpressionTree<Tag, int>(wantedTagIds, tag => tag.ID);

    IQueryable<Tag> tags = context.Tag.Where(whereClause);

    IList<Tag> list = tags.ToList();
}
```

So how much better is this approach, which is decidedly more complex than the simple "get each Tag individually" approach? Is it worth the effort? I performed some benchmarks similar to the ones I did in the last blog to find out.

In one benchmark run, I ran these queries, each a hundred times, each getting out the same 6 tags:

- The "get each Tag individually" query (uncompiled)

- The "get each Tag individually" query (compiled)

- The "generated where clause" query. The where clause was regenerated each time.

I then ran the benchmark 100 times so that I could get more reliable averaged values. These are the results I got:

| | Average | Standard Deviation |
|---|---|---|
| "Get Each Tag Individually" Query Loop (Uncompiled) | 3212.2ms | 40.2ms |
| "Get Each Tag Individually" Query Loop (Compiled) | 1349.3ms | 24.2ms |
| "Generated Where Clause" Query Loop | 197.8ms | 5.3ms |

As you can see, the Generated Where Clause approach is quite a lot faster than the individual queries. We can see compiling the Individual query helps, but not enough to beat the Generated Where Clause query, which is faster even though it is recompiled each time! (You can't precompile a dynamic query, obviously). The Generated Where Clause query is 6.8 times faster than the compiled Individual query and a whopping 16.2 times faster than the uncompiled Individual query.

Even though dynamic queries are a lot harder than normal static queries, because you have to manually mess with Expression Trees, there are large payoffs to be had in doing so. When used in the appropriate place, dynamic queries are faster than static queries. They could also potentially make your code cleaner, especially in the case of the filter example I talked about at the beginning of this blog. So consider getting up to speed with Expression Trees. It's worth the effort.

Making Entity Framework as Quick as a Fox

June 04, 2009 2:00 PM by Daniel Chambers

Entity Framework is the new (as of .NET 3.5 SP1) ORM technology for the .NET Framework. ORM technologies are widely accepted as the "better" way of accessing relational databases, because they allow you to work with relational data as objects in the world of objects. However, ORM tech can be slower than writing manual SQL queries yourself. This can be seen in this blog that benchmarks Entity Framework versus LINQ to SQL and a manual SqlDataReader.

Hardware is cheap (compared to programmer labour, which is not) so getting a faster machine could be an effective strategy to counter performance issues with ORM. However, what if we could squeeze some extra performance out of Entity Framework with only a little effort?

This is where Compiled Queries come in. Compiled queries are good to use where you have one particular query that you use over and over again in the same application. A normal query (using LINQ) is passed to Entity Framework as an expression tree. Entity Framework translates it into a command tree that is then translated by a database-specific provider into a query against a database. It does this every time you execute the query. Obviously, if this query is in a loop (or is called often) this is suboptimal because the query is recompiled every time, even though all that's probably changed is the parameters in the query. Compiled queries ensure that the query is only compiled once, and the only thing that varies is the parameters.

I created a quick benchmark app to find out just how much faster compiled queries are against normal queries. I'll illustrate how the benchmark works and then present the results.

Basically, I had a particular non-compiled LINQ to Entities query which I ran 100 times in a loop and timed how long it took. I then created the same query, but as a compiled query instead. I ran it once, because the query is compiled the first time you run it, not when you construct it. I then ran it 100 times in a loop and timed how long it took. Also, before doing any of the above, I ran the non-compiled query once, because it seemed to take a long time for the very first operation using the Entity Framework to run, so I wanted that time excluded from my results.

The non-compiled query I ran looked like this:

```csharp
IQueryable<Transaction> transactions =
    from transaction in context.Transaction
    where
        transaction.TransactionDate >= FromDate &&
        transaction.TransactionDate <= ToDate
    select transaction;

List<Transaction> list = transactions.ToList();
```

As you can see, it's nothing fancy, just a simple query with a small where clause. This query returns 39 Transaction objects from my database (SQL Server 2005).

The compiled query was created like this:

```csharp
Func<DHEntities, DateTime, DateTime, IQueryable<Transaction>>
    query;

query = CompiledQuery.Compile(
    (DHEntities ctx, DateTime fromD, DateTime toD) =>
        from transaction in ctx.Transaction
        where
            transaction.TransactionDate >= fromD &&
            transaction.TransactionDate <= toD
        select transaction);
```

As you can see, to create a compiled query you pass your LINQ query to CompiledQuery.Compile() via a lambda expression that defines the things that the query needs, i.e. the Object Context (in this case, DHEntities) and the parameters used (in this case, two DateTimes). The Compile function will return a Func delegate that has the types you defined in your lambda, plus one extra: the return type of the query (in this case IQueryable<Transaction>).
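The "compile once, vary only the parameters" idea can be tried without a database. The sketch below is an analogy only: it compiles an in-memory expression tree with System.Linq.Expressions instead of using Entity Framework's CompiledQuery, and `CompileOnceSketch` and `InRange` are hypothetical names. The shape is the same, though: the compiled delegate lives in a static field and each call just supplies new arguments.

```csharp
using System;
using System.Linq.Expressions;

public static class CompileOnceSketch
{
    // The expression tree is just data, much like the LINQ query handed to Entity Framework.
    private static readonly Expression<Func<DateTime, DateTime, DateTime, bool>> InRangeExpression =
        (date, fromD, toD) => date >= fromD && date <= toD;

    // Compiled once and cached in a static field; only the arguments vary per call.
    public static readonly Func<DateTime, DateTime, DateTime, bool> InRange =
        InRangeExpression.Compile();

    public static void Main()
    {
        DateTime start = new DateTime(2009, 1, 1);
        DateTime end = new DateTime(2009, 12, 31);

        Console.WriteLine(InRange(new DateTime(2009, 6, 15), start, end));
        Console.WriteLine(InRange(new DateTime(2010, 1, 1), start, end));
    }
}
```

In a real application the delegate returned by CompiledQuery.Compile() would typically be held in a similar long-lived field, since building it afresh for every call would throw away the benefit.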

The compiled query was executed like this:

    IQueryable<Transaction> transactions = query.Invoke(context, FromDate, ToDate);

    List<Transaction> list = transactions.ToList();

I ran the benchmark 100 times, collected all the data and then averaged the results:

| Benchmark | Average | Standard Deviation |
|---|---|---|
| Non-compiled Query Loop | 534.1ms | 20.6ms |
| Compiled Query Loop | 63.1ms | 0.6ms |

The results are impressive. In this case, compiled queries are roughly 8.5 times faster than normal queries (534.1ms / 63.1ms ≈ 8.5)! I've shown the standard deviation so that you can see that the results didn't fluctuate much between benchmark runs.

The use case I have for using compiled queries is doing database access in a WCF service. I expose a service that will likely be beaten to death by constant queries from an ASP.NET MVC webserver. Sure, I could get larger hardware to make the WCF service go faster, or I could simply get a rather massive performance boost just by using compiled queries.

Sexy C# Filter Code for RealDWG Database Navigation

May 31, 2009 2:00 PM by Daniel Chambers

I've been having to use RealDWG for my part time programming work at Onset to programmatically read DWG files (AutoCAD drawing files). I've been finding RealDWG a pain to learn, as Autodesk's documentation seems to assume you're an in-house AutoCAD programmer, so they blast you with all these low level file access details like BlockTables and SymbolTables. However, this isn't surprising, since I believe Autodesk eat their own dog food and use the same API internally. That doesn't make it any easier to learn and use, though.

RealDWG (or ObjectARX, which is the underlying API that is wrapped with .NET wrapper classes) reads a DWG file into an internal "database". Everything is basically an ObjectId, which is a short stub object that you give to a Transaction object that will get you the actual real object. Objects are nested inside objects, which are nested inside more objects, and none of it is type-safe, as Transaction returns objects as their top level DBObject class. So you're constantly casting to the actual concrete type you want. Casting all over the place == bad.

Anyway, I found that navigating around a RealDWG object graph was a pain. For example, to find BlockTableRecords (which are inside a BlockTable) that contain AttributeDefinitions (don't worry about what those are; it's not important) you need to do something like this:

    BlockTable blockTable = (BlockTable)transaction.GetObject(db.BlockTableId, OpenMode.ForRead);

    foreach (ObjectId objectId in blockTable)
    {
        DBObject dbObject = transaction.GetObject(objectId, OpenMode.ForRead);

        BlockTableRecord record = (BlockTableRecord)dbObject;

        if (record.HasAttributeDefinitions == false)
            continue;

        //Do what you need to here...
    }

That's really verbose and messy, with a lot of code just dedicated to opening objects and casting them, and filtering. It was annoying me, so I refactored it and wrote the following sexy method that uses generics with delegates to clean that right up:

    private IEnumerable<T> Filter<T>(Predicate<T> predicate, IEnumerable realDwgEnumerable, Transaction transaction)
        where T : class
    {
        foreach (ObjectId obj in realDwgEnumerable)
        {
            DBObject dbObject = transaction.GetObject(obj, OpenMode.ForRead);

            T genericObj = dbObject as T;
            if (genericObj == null)
                continue;

            if (predicate != null && predicate(genericObj) == false)
                continue;

            yield return genericObj;
        }
    }

The method is generic and takes an IEnumerable (which is the non-generic interface that all the RealDWG stuff that you can iterate over implements) and a Transaction (to open objects from ObjectId stubs with). Additionally, it takes a Predicate<T> that allows you to specify a condition on which concrete objects are included in the final set. It returns an IEnumerable of the generic type T.

What the method will do is iterate over the IEnumerable and pull out all the objects that match the generic type that you define when you call the method. Additionally, it will return only those objects that match your predicate. The method uses the yield return keywords to lazily return results as the returned IEnumerable is iterated over.

Here's the above messy example all sexed up by using this method:

    BlockTable blockTable = (BlockTable)transaction.GetObject(db.BlockTableId, OpenMode.ForRead);

    IEnumerable<BlockTableRecord> blockTableRecords = Filter<BlockTableRecord>(btr => btr.HasAttributeDefinitions, blockTable, transaction);

    foreach (BlockTableRecord blockTableRecord in blockTableRecords)
    {
        //Do what you need to here...
    }

Just like using LINQ, the above snippet (which looks longer than it really is thanks to wrapping) simply declares that it wants all BlockTableRecords in the BlockTable that have attribute definitions (using a lambda expression). It's much neater, if only because it shifts the filtering code out of the way into the Filter method, so that it doesn't clutter up what I'm trying to do. It also makes the foreach type-safe, because now we're iterating over an IEnumerable<T> rather than a non-generic IEnumerable. Worst case: the IEnumerable<T> is empty. No InvalidCastExceptions here.

Another place where this method is awesome is when you've got lots of different typed objects getting returned as you iterate over the RealDWG IEnumerable object, which happens a lot. Using this method you can very simply get the type of object you're looking for by doing this:

    IEnumerable<TypeIWant> objectsIWant = Filter<TypeIWant>(null, blockTableRecord, transaction);

Optimally you use the overload that doesn't include the Predicate<T> as a parameter, instead of passing null as the predicate (the overload passes null for you). I didn't show that here, but it's in my code.
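If you don't have RealDWG handy, the pattern can still be tried on its own. The following is a stand-in sketch rather than my actual code: it drops the Transaction lookup and filters a plain non-generic IEnumerable of mixed objects, and it includes a guess at what the predicate-less overload might look like. `FilterSketch` is a hypothetical class name.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

public static class FilterSketch
{
    // Overload without a predicate: it simply passes null for you.
    public static IEnumerable<T> Filter<T>(IEnumerable source) where T : class
    {
        return Filter<T>(null, source);
    }

    // Same shape as the Filter method above, minus the Transaction lookup.
    public static IEnumerable<T> Filter<T>(Predicate<T> predicate, IEnumerable source)
        where T : class
    {
        foreach (object item in source)
        {
            T typed = item as T;
            if (typed == null)
                continue; // wrong type: skipped rather than throwing InvalidCastException

            if (predicate != null && predicate(typed) == false)
                continue;

            yield return typed; // nothing runs until the caller starts iterating
        }
    }

    public static void Main()
    {
        ArrayList mixed = new ArrayList { "block", 42, "attribute", new object(), "a" };

        foreach (string s in Filter<string>(mixed))
            Console.WriteLine(s);

        foreach (string s in Filter<string>(str => str.Length > 1, mixed))
            Console.WriteLine(s);
    }
}
```

For ordinary in-memory collections, LINQ's `OfType<T>()` followed by `Where()` gives the same result; the custom method earns its keep in the RealDWG case because each ObjectId has to be opened through the Transaction before it can be type-checked.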

Wrapping up, this again confirms how much I love C# 3.0 (and soon 4.0!). All the new language features let you do some simply awesome things that you just can't do in aging languages like Java (Java doesn't even have delegates, let alone lambda expressions!).

Variable Capture in C# with Anonymous Delegates

April 06, 2009 2:00 PM by Daniel Chambers

Anonymous delegates (or, if you're using C# 3.0, lambda expressions) seem fairly simple at first sight. They're just class-less methods that you can pass around and call at will. However, there are some intricacies that aren’t apparent unless you look deeper. In particular, what happens if you use a variable from outside the anonymous delegate inside the delegate? What happens when that variable goes out of scope (say it’s a local variable and the method that contained it returned)?

I’ll run through some small examples that will explain something called “variable capture” and how it relates to anonymous delegates (and therefore, lambda expressions).

The code below runs a for loop and, on each iteration, adds a new lambda to a list; each lambda returns the index variable from the for loop. After the loop has concluded, all the lambdas created are run and their results written to the console. FYI, Func<TResult> is a .NET built-in delegate that takes no parameters and returns TResult.

    List<Func<int>> funcs = new List<Func<int>>();
    for (int j = 0; j < 10; j++)
        funcs.Add(() => j);
    foreach (Func<int> func in funcs)
        Console.WriteLine(func());

What will be outputted on the console when this code is run? The answer is ten 10s. Why is this? Because of variable capture. Each lambda has “captured” the variable j, and in essence, extended its scope to outside the for loop. Normally j would be thrown away at the end of the for loop. But because it has been captured, the delegates hold a reference to it. So its final value, after the loop has completed, is 10 and that’s why 10 has been outputted 10 times. (Also, j won’t be garbage collected until the lambda is, since it holds a reference to j.)

In this next example, I’ve added one line of seemingly redundant code, which assigns the j index variable to a temporary variable inside the loop body. The lambda then uses tempJ instead of j. This makes a massive difference to the final output!

    List<Func<int>> funcs = new List<Func<int>>();
    for (int j = 0; j < 10; j++)
    {
        int tempJ = j;
        funcs.Add(() => tempJ);
    }
    foreach (Func<int> func in funcs)
        Console.WriteLine(func());

This piece of code outputs 0-9 on the console. So why is this so different to the last example? Whereas j’s scope is over the whole for loop (it is the same variable across all loop iterations), tempJ is a new tempJ for every time the loop is run. This means that when the lambdas capture tempJ, they each capture a different tempJ that contains what j was for that particular iteration of the loop.

In this final example, the lambda is created and evaluated within the for loop (and no longer uses tempJ).

    for (int j = 0; j < 10; j++)
    {
        Func<int> func1 = () => j;
        Console.WriteLine(func1());
    }

This code is similar to the first example; the lambdas capture j whose scope is over the whole for loop. However, unlike the first example, this outputs 0-9 on the console. Why? Because the lambda is executed inside each iteration. So at the point at which each lambda is executed j is 0-9, unlike the first example where the lambdas weren’t executed until j was 10.

In conclusion, using these small examples I’ve shown the implications of variable capture. Variable capture happens when an anonymous delegate uses a variable from the scope outside of itself. This causes the delegate to “capture” the variable (i.e. hold a reference to it) and therefore the variable will not be garbage collected until the capturer delegate itself is garbage collected.
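One way to picture capture is to write out by hand roughly what the compiler does behind the scenes: it moves the captured variable into a hidden class and points the delegate at a method on that class. The sketch below is a simplified illustration, not the compiler's exact output; `CaptureSketch` and `Closure` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

public static class CaptureSketch
{
    // Hand-written stand-in for the hidden class that holds a captured variable.
    private sealed class Closure
    {
        public int Value;
        public int Get() { return Value; }
    }

    // Like the first example: one closure object shared by every delegate.
    public static List<int> SharedVariable()
    {
        List<Func<int>> funcs = new List<Func<int>>();
        Closure shared = new Closure();
        for (shared.Value = 0; shared.Value < 10; shared.Value++)
            funcs.Add(shared.Get);
        return Run(funcs);
    }

    // Like the tempJ example: a fresh closure object for each iteration.
    public static List<int> VariablePerIteration()
    {
        List<Func<int>> funcs = new List<Func<int>>();
        for (int j = 0; j < 10; j++)
        {
            Closure perIteration = new Closure();
            perIteration.Value = j;
            funcs.Add(perIteration.Get);
        }
        return Run(funcs);
    }

    // Invokes every delegate after the loop has finished.
    private static List<int> Run(List<Func<int>> funcs)
    {
        List<int> results = new List<int>();
        foreach (Func<int> func in funcs)
            results.Add(func());
        return results;
    }

    public static void Main()
    {
        Console.WriteLine(string.Join(",", SharedVariable()));       // ten 10s
        Console.WriteLine(string.Join(",", VariablePerIteration())); // 0 through 9
    }
}
```

Seen this way, the delegates keep the closure object alive for as long as they themselves are reachable, which is why the captured variable outlives the loop.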

Value Type Boxing in C#

March 12, 2009 2:00 PM by Daniel Chambers

There are times when I am surprised because I come across some basic principle or feature in a programming language that I just didn't know about but really should have (see the "Generics and Type-Safety" blog post for an example). The most recent example of this was in my Enterprise .NET lecture where they asked us to define what boxing and unboxing were. I'd heard of it in relation to Java, because Java has non-object value types that need to be converted to objects sometimes (the process of "boxing") so they can be used with Java's crappy generics system. But since, in C#, even an int is an object with methods, I assumed that boxing and unboxing was not done in C#.

I was wrong. C# indeed does boxing and unboxing! At first, this didn't make sense. My incomplete understanding of boxing (in relation to Java) was that value types were stored only on the stack (yes, this is a little inaccurate) and when you needed to put them on the heap, you had to box them. In C#, I thought everything was an object, so this process would have been redundant.

Wrong. .NET (and therefore C#) has value types, which are boxed and unboxed transparently by the CLR. Value types in C# derive from the ValueType class which itself derives from Object. Structs in C# are automatically derived from ValueType for you (therefore you cannot do inheritance with structs). Unlike in Java, value types are still objects: they can have methods, fields, properties, events, etc.

Why are value types good? When .NET deals with a value type, it stores the object's data inline in memory. This means when the variable is on the stack, the data is stored directly in stack-space. When the variable is inside a heap object, the data is stored directly inside the heap object. This is different to reference types, where instead of the data being stored inline, a pointer to the data which is somewhere on the heap is stored inline. This means it takes longer to access a reference type than a value type as you have to read the pointer, then read the location the pointer points to.

Boxing kills this performance increase you get when you use value types. When you box (or more accurately, the CLR boxes) a value type, it essentially wraps it in a reference type object that is stored on the heap and then uses a reference to point to it. Your value type is now a reference type. So not only do you need to look up a reference to get to the final data, you have to spend time creating the wrapper object at runtime.
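A small sketch makes the copying visible. `BoxingSketch` and its method names are hypothetical, chosen just for this illustration.

```csharp
using System;

public static class BoxingSketch
{
    // Boxes a value, changes the original variable, then unboxes the copy.
    public static int UnboxAfterChange()
    {
        int number = 42;
        object boxed = number; // boxing: the value is copied into a new heap object
        number = 100;          // changes only the original variable, not the box
        return (int)boxed;     // unboxing: the value is copied back out
    }

    // Boxes the same value twice and checks whether both references point at one object.
    public static bool BoxesShareIdentity(int value)
    {
        object first = value;
        object second = value;
        return ReferenceEquals(first, second);
    }

    public static void Main()
    {
        Console.WriteLine(UnboxAfterChange());     // 42
        Console.WriteLine(BoxesShareIdentity(42)); // False
    }
}
```

Every boxing operation allocates its own wrapper object, which is where the extra runtime cost comes from.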

When does boxing happen? The main place to watch out for is when you pass a value type around as Object. A common place this might happen is if you use ArrayList. If you do, it's time to move on. :) .NET 2.0 introduced generics and you should use them. Generics play nice with value types, so try using a List<T> instead.

So what do I mean when I say "generics play nice with value types"? Unlike Java, whose generics system sucks (it does type erasure, which is half-arsed generics), .NET understands generics at runtime. This means when you define, for example, a List<int>, .NET realises that int is a value type and then will allocate ints inline inside the List as per the "inline storage" explanation above. This is lots better than Java or ArrayList's behaviour, where each element in the array is a pointer to a location on the heap because the value type that was added has been boxed.
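Here's a short side-by-side sketch of the two collections. `RuntimeGenericsSketch` and its method names are hypothetical; ArrayList and List<T> are the real framework types.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

public static class RuntimeGenericsSketch
{
    // ArrayList stores object references, so the int is boxed on Add
    // and needs an explicit unboxing cast on the way out.
    public static int ViaArrayList(int value)
    {
        ArrayList untyped = new ArrayList();
        untyped.Add(value);
        return (int)untyped[0];
    }

    // List<int> stores the ints inline in its backing array: no boxing, no cast.
    public static int ViaGenericList(int value)
    {
        List<int> typed = new List<int>();
        typed.Add(value);
        return typed[0];
    }

    // The type argument survives to runtime, so these are two distinct types.
    public static bool ListTypesAreDistinct()
    {
        return typeof(List<int>) != typeof(List<string>);
    }

    public static void Main()
    {
        Console.WriteLine(ViaArrayList(1) + ViaGenericList(1)); // 2
        Console.WriteLine(ListTypesAreDistinct());              // True
    }
}
```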

In hindsight, especially when I think about it all from a C++ perspective, I should have known C# did value type boxing. How could it have value types and not? But I guess I just didn't join the dots.

Sleep Display

November 01, 2008 2:00 PM by Daniel Chambers

I've always had a problem when I've left my computer on at night. My screens, the two 24" beasts that they are, light up my room like it's 12 o'clock midday. It makes it rather difficult to get to sleep. My solution, in the past, was to temporarily change my Windows power configuration so that my monitor would sleep itself after one minute of inactivity. A clunky and annoying solution, to be sure.

This weekend, I'd had enough. I searched around on the net for a program that would allow me to sleep my displays immediately, without the need for dicking around in power configuration settings.

Sure enough, this bloke has a program that'll do just that. However, he expects to be paid twenty whole dollars for it. I choked when I saw that; turning off your screens cannot be so hard as to require a 20 dollar (US dollars as well!) payment for it. So I did some more searching. Sure enough, it's quite literally one method call to send your displays to sleep.

So I whipped up a program to do it: Sleep Display. I won't repeat here what I've already written on its webpage, so pop on over there to read up on it.

For those interested, here is how you sleep your monitors in C# (note this is not actually a class from Sleep Display, I've cut out loads of code to just get it down to the bare essentials):

    using System;
    using System.Runtime.InteropServices;
    using System.Windows.Forms;

    namespace DigitallyCreated.SleepDisplay
    {
        public class Sleeper
        {
            //From WinUser.h
            private const int WM_SYSCOMMAND = 0x0112;
            private const int SC_MONITORPOWER = 0xF170;
            //Not from WinUser.h (from MSDN doco about
            //SC_MONITORPOWER)
            private const int SC_MONITORPOWER_SHUTOFF = 2;

            public void SleepDisplay()
            {
                //Dispose the temporary Form once the message has been sent
                using (Form form = new Form())
                {
                    DefWindowProcA(form.Handle, WM_SYSCOMMAND,
                        (IntPtr)SC_MONITORPOWER, (IntPtr)SC_MONITORPOWER_SHUTOFF);
                }
            }

            //IntPtr keeps the handle and parameters pointer-sized,
            //so the call is also correct in a 64-bit process
            [DllImport("user32", EntryPoint = "DefWindowProc")]
            public static extern IntPtr DefWindowProcA(IntPtr hwnd,
                int wMsg, IntPtr wParam, IntPtr lParam);
        }
    }

Basically, all you're doing is sending a Windows message to the default window message handler by calling a Win32 API. The message simply tells Windows to sleep the monitor. Note the use of DllImport and public static extern. This is basically where you map a call to a native (probably C or C++) function into C#. C# will handle the loading of the DLL and the marshalling and the actual calling of the function for you. It's pretty nice.

The Form is needed because DefWindowProc requires a handle to a window and the easiest way I knew how to get one of those was to simply create a Form and get its Handle. DefWindowProc has its doco on MSDN here and the doco for the types of WM_SYSCOMMAND message you can send is here.

As you can see, the process is not rocket-science, nor is it worth 20 whole bucks. In fact, the installer I created was more of a pain than the app itself. I decided to try and use Windows Installer instead of NSIS. I used Windows Installer XML (WiX) which seems to be an open-source project from Microsoft that allows you to create Windows Installer packages and UIs by writing XML. It's also got a plugin for Visual Studio.

WiX is... alright. It's a real bitch to work with, simply because I'm nowhere near a Windows Installer pro so I don't really understand what's going on. Windows Installer is massive and I only scratched the surface of it when doing the installer for Sleep Display. The problem with WiX, really, is that it has a massive learning curve. If you're interested in learning more about WiX, I suggest you grab v3.0 (Beta) and install it, then go check out the (huge) tutorial they've got here. Then prepare to go to bed at 6.30AM after hours of pain like I did. :)

Generics and Type-Safety

September 06, 2008 3:00 PM by Daniel Chambers

I ran into a little issue at work to do with generics, inheritance and type-safety. Normally, I am an absolute supporter of type-safety in programming languages; I have always found that type safety catches bugs at compile-time rather than at run-time, which is always a good thing. However, in this particular instance (which I have never encountered before), type-safety plain got in my way.

Imagine you have a class Fruit, from which you derive a number of child classes such as Apple and Banana. There are methods that return Collection<Apple> and Collection<Banana>. Here's a code example (Java), can you see anything wrong with it (excluding the horrible wantApples/wantBananas code... I'm trying to keep the example small!)?

    Collection<Fruit> fruit;

    if (wantApples)
        fruit = getCollectionOfApple();
    else if (wantBananas)
        fruit = getCollectionOfBanana();
    else
        throw new BabyOutOfPramException();

    for (Fruit aFruit : fruit)
        aFruit.eat();

Look OK? It's not. The error is that you cannot hold a Collection<Apple> or Collection<Banana> as a Collection<Fruit>! Why not, eh? Both Bananas and Apples are subclasses of Fruit, so why isn't a Collection of Banana a Collection of Fruit? All Bananas in the Collection are Fruit!

At first I blamed this on Java's crappy implementation of generics. In Java, generics are a compiler feature and not natively supported in the JVM. This is the concept of "type-erasure", where all generic type information is erased during a compile. All your Collections of type T are actually just Collections of no type. The most frustrating place where this bites you is when you want to do this:

    interface MyInterface
    {
        void myMethod(Collection<String> strings);
        void myMethod(Collection<Integer> numbers);
    }

Java will not allow that, as the two methods are indistinguishable after a compile, thanks to type-erasure. Those methods actually are:

    interface MyInterface
    {
        void myMethod(Collection strings);
        void myMethod(Collection numbers);
    }

and you get a redefinition error. Of course, .NET since v2.0 has treated generics as a first-class construct inside the CLR. So the equivalent to the above example in C# would work fine since a Collection<String> is not the same as a Collection<Integer>.
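For comparison, here is a sketch of the C# equivalent, which compiles and dispatches to the right overload. The names `IMyInterface`, `MyImplementation` and `OverloadSketch` are hypothetical, ICollection<T> stands in for Java's Collection, and the methods return a string (instead of void) purely so the chosen overload can be observed.

```csharp
using System;
using System.Collections.Generic;

public interface IMyInterface
{
    // Two overloads that differ only in the generic type argument.
    string MyMethod(ICollection<string> strings);
    string MyMethod(ICollection<int> numbers);
}

public class MyImplementation : IMyInterface
{
    public string MyMethod(ICollection<string> strings) { return "strings: " + strings.Count; }
    public string MyMethod(ICollection<int> numbers) { return "numbers: " + numbers.Count; }
}

public static class OverloadSketch
{
    public static void Main()
    {
        IMyInterface impl = new MyImplementation();
        Console.WriteLine(impl.MyMethod(new List<string> { "a", "b" })); // strings: 2
        Console.WriteLine(impl.MyMethod(new List<int> { 1, 2, 3 }));     // numbers: 3
    }
}
```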

Anyway, enough ranting about Java. I insisted to my co-worker that I was sure C# with its non-crappy generics would have allowed us to assign a Collection<Apple> to a Collection<Fruit>. However, I was totally wrong. A quick Google search told me that you absolutely cannot allow a Collection<Apple> to be assigned to a Collection<Fruit> or it will break programming. This is why:

    Collection<Fruit> fruit;
    Collection<Apple> apples = new ArrayList<Apple>();
    fruit = apples; //Assume this line works OK
    fruit.add(new Banana());

    for (Apple apple : apples)
        apple.eat();

Can you see the problem? By storing a Collection<Apple> as a Collection<Fruit> we suddenly make it OK to add any type of Fruit to the Collection, such as the Banana added by the fruit.add(new Banana()) call. Then, when we foreach through the apples Collection (which now contains a Banana, thanks to that call) we would get a ClassCastException because, holy crap, a Banana is not an Apple! We just broke programming.
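C# arrays happen to allow exactly this kind of assignment, so they give a runnable view of what goes wrong. In the sketch below (class names are hypothetical stand-ins for the Fruit hierarchy), the assignment compiles, and the runtime has to step in and reject the Banana when it is stored.

```csharp
using System;

public class Fruit { }
public class Apple : Fruit { }
public class Banana : Fruit { }

public static class ArrayCovarianceSketch
{
    // Returns true if the Banana was stored, false if the runtime rejected it.
    public static bool TryStoreBanana()
    {
        Apple[] apples = new Apple[1];
        Fruit[] fruit = apples;      // allowed: C# arrays are covariant
        try
        {
            fruit[0] = new Banana(); // compiles, but the array is really an Apple[]
            return true;
        }
        catch (ArrayTypeMismatchException)
        {
            return false;            // the store is checked and refused at runtime
        }
    }

    public static void Main()
    {
        Console.WriteLine(TryStoreBanana()); // False
    }
}
```

Generic collections avoid the need for that runtime check by refusing the assignment at compile time instead.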

So how can we make this work? In Java, we can use wildcards:

    Collection<? extends Fruit> fruit;

    if (wantApples)
        fruit = getCollectionOfApple();
    else if (wantBananas)
        fruit = getCollectionOfBanana();
    else
        throw new BabyOutOfPramException();

    for (Fruit aFruit : fruit)
        aFruit.eat();

Disappointingly, C# does not support the concept of wildcards. The best I could do was this:

    private void MyMethod()
    {
        if (_WantApples)
            EatFruit(GetEnumerableOfApples());
        else if (_WantBananas)
            EatFruit(GetEnumerableOfBananas());
        else
            throw new BabyOutOfPramException();
    }

    private void EatFruit<T>(IEnumerable<T> fruit) where T : Fruit
    {
        foreach (T aFruit in fruit)
            aFruit.eat();
    }

Basically, we're declaring a generic method that takes any type of Fruit, and then the compiler is inferring the type to be used for the EatFruit method by looking at the return type of the two getter methods. This code is not as nice as the Java code.
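Here is a self-contained version of that approach that can be compiled and run. The Fruit classes are hypothetical stand-ins, and `Eat` returns a string only so the result can be inspected. The last part of Main relies on something that did not exist when this was written: from C# 4.0 onwards, IEnumerable<T> is declared covariant, so a read-only view of the apples can be assigned to IEnumerable<Fruit> directly.

```csharp
using System;
using System.Collections.Generic;

public abstract class Fruit
{
    public abstract string Eat();
}

public class Apple : Fruit
{
    public override string Eat() { return "Ate an apple"; }
}

public class Banana : Fruit
{
    public override string Eat() { return "Ate a banana"; }
}

public static class EatFruitSketch
{
    // Generic method with a constraint, as in the code above.
    public static List<string> EatFruit<T>(IEnumerable<T> fruit) where T : Fruit
    {
        List<string> log = new List<string>();
        foreach (T aFruit in fruit)
            log.Add(aFruit.Eat());
        return log;
    }

    public static void Main()
    {
        List<Apple> apples = new List<Apple> { new Apple(), new Apple() };

        // T is inferred as Apple from the argument type.
        foreach (string line in EatFruit(apples))
            Console.WriteLine(line);

        // C# 4.0 and later only: IEnumerable<out T> is covariant, so this
        // read-only view is allowed. Assigning apples to a List<Fruit> is still an error.
        IEnumerable<Fruit> readOnlyFruit = apples;
        foreach (string line in EatFruit(readOnlyFruit))
            Console.WriteLine(line);
    }
}
```

The covariant assignment is safe for the same reason the Java wildcard version is: IEnumerable<T> only hands elements out, so there is no way to sneak a Banana in.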

You must be wondering, however, what if we added this line to the bottom of the above Java code:

    fruit.add(new Banana());

What would happen is that Java would issue an error. This is because the generic type "? extends Fruit" actually means "unknown type that extends Fruit". The "unknown type" part is crucial. Because the type is unknown, the add method for Collection<? extends Fruit> effectively looks like this (pseudocode, since null is not a real type name):

    public boolean add(null o);

Yes! The only thing that the add method can take is null, because a reference to anything can always be set to null. So even though we don't know the type, we know that no matter what type it is, it can always be null. Therefore, trying to pass a Banana into add() would fail.

The foreach loop works okay because the iterator that works inside the foreach loop must always return something that is at least a Fruit thanks to the "extends Fruit" part of the type definition. So it's okay to set a Fruit reference using a method that returns "? extends Fruit" because the thing that is returned must be at least a Fruit.

Although obviously wrong now, the assignment of Collection<Apple> to Collection<Fruit> seemed to make sense when I first encountered it. This has enlightened me to the fact that there are nooks and crannies in both C# and Java that I have yet to explore.